I think 14th gen is doing a 20% uplift. I think it would be better to get a second box, do a small ITX build, and just let it roll 24/7 at this point, because things are getting really fast. Cheap too if you have a good job (I do).

I’m retired. But I saved some money and bought many toys ahead of time.

I look forward to your tests.

I’m curious to see how it performs.

I unboxed my new Gigabyte GeForce RTX 4090 this morning. It will probably be a day or two before I have a chance to install it in place of my RTX 3090.

I have the Passmark performance test on this machine. Before uninstalling the 3090, I will uninstall all of my OC software and run the benchmark tests. I will then replace the 3090 with the new 4090 and after making certain I have the appropriate (Nvidia) drivers, etc in place, I will run the benchmarks again and I’ll be able to report on the native speed improvement.
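For the before/after comparison, the native speed improvement is just the ratio of the two benchmark scores. A minimal sketch in Python; the function name and the example scores are made up for illustration and are not real Passmark results:

```python
def percent_improvement(old_score: float, new_score: float) -> float:
    """Return how much faster new_score is than old_score, in percent."""
    if old_score <= 0:
        raise ValueError("old_score must be positive")
    return (new_score / old_score - 1.0) * 100.0


# Placeholder numbers only, not measured results.
print(f"{percent_improvement(20000, 30000):.1f}% faster")
```

Running both benchmarks with the OC software removed, as planned, keeps the two scores comparable.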

Whether it will be faster in VEAI or not will depend on whether Topaz has been using an Nvidia driver SDK that already supports and optimizes both the 3090 and the 4090. The 4090 has a few extended capabilities the 3090 didn’t and is much faster overall. However, implementing this can take a while. Presently, Topaz VEAI has managed to increase GPU utilization and framerate considerably, and they claim they are still optimizing.

When I first put my 3090 into this machine, none of my graphics packages could really utilize it except through the “universal” generic Windows driver, which (back then) made everything overheat and was still rather slow. Since that time, most of the software vendors have integrated the appropriate drivers for the 3090. I have no idea whether they will fully support the 4090’s potential right away or whether it will take them a while to update, but we will see soon enough.

Later…

In theory, Ampere should have been the beta test for LL; the architecture is not that different.

Going from 3080 to 4090, I am only seeing a 10-20% increase in v3 (mostly Artemis 1080p or 1080p to 4K). Not sure whether Topaz is better optimized for Intel CPUs, since I am using a 5950 paired with 64GB RAM.

But the 4090 allows 3 parallel processes without much sweat (Topaz needs to allow more parallel instances).

And the power efficiency is incredible, especially when running the card with a slight undervolt like 0.9 V @ 2600 MHz.

At the moment I am running the gaming drivers without problem, not the studio ones. Not sure whether that would make a difference. Might have to try that.

I can power limit my 3080 by like 50% and still get the same performance. I am happy I am sitting this one out. I hope that by the time the 5090 launches most of this stuff will be fixed and optimized, but I understand they are a small team.
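For anyone who wants to try a power limit like this from the command line, here is a rough sketch that only builds the `nvidia-smi` call. `-i` (GPU index) and `-pl` (power limit in watts) are real `nvidia-smi` options; the helper name and the 320 W default are assumptions for illustration, so check your own card's limits first (for example with `nvidia-smi -q -d POWER`):

```python
from typing import List


def power_limit_command(gpu_index: int, default_limit_w: float, fraction: float) -> List[str]:
    """Build an nvidia-smi call that caps board power at a fraction of the default."""
    if not 0.0 < fraction <= 1.0:
        raise ValueError("fraction must be in (0, 1]")
    target_w = round(default_limit_w * fraction)
    # -i selects the GPU, -pl sets the power limit in watts (needs admin rights).
    return ["nvidia-smi", "-i", str(gpu_index), "-pl", str(target_w)]


# 320 W is an assumed default limit, not a value read from a real card.
print(" ".join(power_limit_command(0, 320, 0.5)))
```

The command is only printed here, not executed. The driver rejects values outside the range the card allows, so a 50% cap may not be accepted on every model.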

I have just been researching this on and off.

VEAI behaves like a normal video app: it is virtually always CPU-limited.

For this to improve, support for small processors would have to be removed, which can’t be in the company’s best interest, as I think the majority of users are somewhere between 8 cores and an RTX3060/2070.

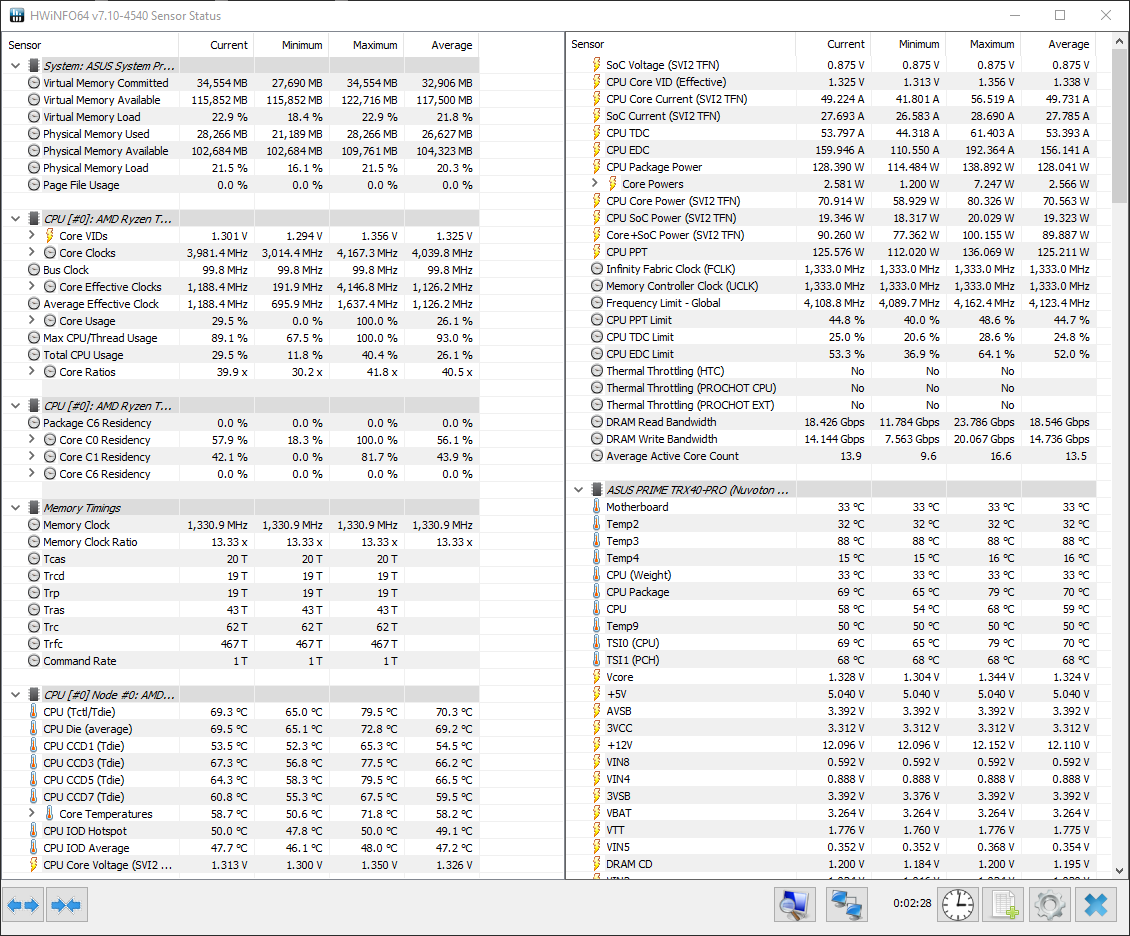

Even with Denoise AI, I average about 10 cores in use out of my 24.

Every time the GPU finishes a frame, the CPU ramps up to 16 cores.

Denoise AI - CPU & GPU Load

VEAI - Proteus Manual - Video = 4K 1:1 60FPS, mp4, Performance 0.7 fps, Radeon Pro W6800

Average Core usage = 13 Cores.
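One way to arrive at an "average cores in use" figure like this is to add up the per-core load percentages and divide by 100. This is only a sketch of that arithmetic, not necessarily how the numbers above were measured; the sample values are hypothetical. On a live system the per-core list could come from something like the third-party `psutil.cpu_percent(percpu=True)`, which is not used here so the example stays self-contained:

```python
def cores_in_use(per_core_percent):
    """Sum per-core load (0-100 each) into an equivalent count of fully busy cores."""
    return sum(per_core_percent) / 100.0


# Hypothetical sample: 24 logical cores, 12 fully loaded and 12 at 10%.
sample = [100.0] * 12 + [10.0] * 12
print(cores_in_use(sample))
```

A result well below the core count, while the GPU is also not fully loaded, would fit the picture of the app waiting on a few busy threads rather than using the whole CPU.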

RAM read and write is very high compared to my other apps.

Something must be wrong, I can’t even play the video smoothly in VEAI 3.0.0.

Yes, I understand. But I’m not sure the Nvidia SDKs they used can leverage the newer extended capabilities of the 4090. I believe that some of these may be able to enhance the performance and quality of the images.

I have some other graphics software applications that utilize AI to get better, faster results. If there is any improvement there, I’ll

Yes, it seems the CPU is the limit when using one of the more recent higher end GPU models.

Here is a very recent CPU benchmark comparison of Topaz image upscaling and 2 other AI-related tasks:

https://www.techpowerup.com/review/intel-core-i9-13900k/9.html

I do not understand why the image resolutions are not specified.

This is also crucial for gaming.

I’ve seen that the load is much lower on my Intel and Nvidia system.

But the performance was the same as with the TR 3960X & W6800.

7820X & RTX 5000

Maybe AVX-512 is used, and of course the Tensor cores.

I wrote the performance numbers of the Intel system into the Google sheet.

Update: I missed that the RTX 3090 is just 8% faster in Gaia than the RTX 3070 Ti, but I don’t see a CPU bottleneck either.

Hi,

It doesn’t look like this was answered. What is really the best GPU for Topaz Video AI? Would a V100 or H100 perform a lot better than a 4090? I know the price is very high but one could get a VM and pay only when using it. Has anybody tried this? I would really like much higher performance and am willing to pay for it, but don’t want to do that unless I know it would make a meaningful difference.

Like, is it a Threadripper 7995WX paired with dual or quad H100s? (Again, not sure I can get THAT, but asking because I can get something.) I just want to know if Topaz AI benefits from these in a meaningful way.

Thanks.

Good question. I think with an Intel 13th or 14th generation CPU and an RTX 4090 you will get a lot of computing power. The only disadvantages are the price of maybe 4000 EUR for the whole PC and that Video AI does not yet seem to perform according to the specs of the GPU, for an unknown reason.

It’s not just GPU speed. The models have to be optimized per GPU. Topaz has optimized for NVIDIA GeForce and AMD Radeon, but Intel Arc cards still don’t seem to work well with the app. And Topaz hasn’t said anything about whether their apps will even recognize AI-specific chips.

If you try this, I really want to know the performance of a single V100 and H100.

So you are also looking for a faster GPU? I could give you temporary access to my PC to test Topaz products on this machine, maybe via TeamViewer, if you like. We might ask Topaz for permission first; not sure if this is a valid option under the current license.

That’s fine, thanks, doesn’t have to be.

I would be more interested in the two Pro products. An H100 theoretically comes out at 3x a 4090.

I’d say first step is ask Topaz whether TVAI works with the processor.

As far as I know, it will work with the CLI only.

Because when using the UI, the built-in GPU with display output will compute the preview (maybe, maybe not).

Maybe we should ask @suraj if a V100 or H100 in a VM will work with TVAI, and how.

My TVAI PC has two GPUs. One drives the displays and audio. The other is the one TVAI is set to use as its “AI processor,” and doesn’t have anything connected to it. The GPU the monitors are connected to is the one running the UI and feeding the previews to the displays.

This is the same setup you’d have with a non-GPU accelerator card. The difference is that NVIDIA’s drivers for accelerator cards are called “datacenter drivers” and not “graphics drivers.” So the question is, if you install an accelerator card with a “datacenter driver,” will TVAI be able to recognize it and use it as its “AI processor?”

AMD Instinct cards would be in the same boat. Their drivers are called “server accelerator drivers.”
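On the NVIDIA side, a first sanity check before even starting TVAI is whether the driver itself lists the card. `nvidia-smi -L` prints one line per GPU; the sketch below parses that output in Python. The helper names are my own, and this only shows what the driver sees. Whether TVAI will then offer the card as its “AI processor” is exactly the open question:

```python
import re
import subprocess


def parse_gpu_list(text: str) -> list:
    """Extract (index, name) pairs from `nvidia-smi -L` style output."""
    pattern = re.compile(r"^GPU (\d+): (.+?) \(UUID: .+\)\s*$")
    gpus = []
    for line in text.splitlines():
        match = pattern.match(line.strip())
        if match:
            gpus.append((int(match.group(1)), match.group(2)))
    return gpus


def list_nvidia_gpus() -> list:
    """Run `nvidia-smi -L`; return [] if the tool is missing or fails."""
    try:
        result = subprocess.run(
            ["nvidia-smi", "-L"], capture_output=True, text=True, check=True
        )
    except (OSError, subprocess.CalledProcessError):
        return []
    return parse_gpu_list(result.stdout)


print(list_nvidia_gpus())
```

An empty list means either no NVIDIA driver is installed or `nvidia-smi` is not on the path. In a two-GPU setup like the one described above, both cards should show up with their own index.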