What is the fastest speed you have ever had in Topaz Video Enhance AI? Is there any GPU that reaches 0.01 sec/frame when upscaling 1080p to 2160p?

I haven’t used it, but it could be the MI100 from AMD, with 184.6 TFLOPS FP16.

That is 4.6x the RTX 3090 Ti.

Maybe you could use it by renting a server.

That would be 0.01058 sec per frame for the community video, compared to the 3090.

In theory.

Based on online sources.
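The arithmetic behind that estimate is a simple inverse scaling by peak FP16 throughput. Here is a minimal Python sketch of it, assuming runtime is purely proportional to FP16 compute (real VEAI runs are also limited by CPU, memory, and software). `projected_sec_per_frame` is a hypothetical helper, and the 0.05 sec/frame baseline is a placeholder, since the 3090 measurement for the community video isn't quoted here.

```python
def projected_sec_per_frame(baseline_sec: float, baseline_tflops: float,
                            target_tflops: float) -> float:
    """Scale a measured sec/frame by the ratio of peak FP16 throughput.

    Hypothetical helper: assumes runtime is purely proportional to FP16
    compute and ignores CPU, memory bandwidth, and software limits.
    """
    if min(baseline_sec, baseline_tflops, target_tflops) <= 0:
        raise ValueError("all inputs must be positive")
    return baseline_sec * baseline_tflops / target_tflops


MI100_FP16_TFLOPS = 184.6
# Implied by the "4.6x RTX 3090 Ti" figure quoted above (184.6 / 4.6 is about 40.1).
RTX_3090_TI_FP16_TFLOPS = MI100_FP16_TFLOPS / 4.6

if __name__ == "__main__":
    baseline = 0.05  # placeholder sec/frame, not a measured result
    est = projected_sec_per_frame(baseline, RTX_3090_TI_FP16_TFLOPS, MI100_FP16_TFLOPS)
    print(f"MI100 estimate: {est:.4f} sec/frame ({1 / est:.1f} fps)")
```

Inverting the same ratio gives the FP16 throughput a card would need for a target like 0.01 sec/frame: `baseline_tflops * baseline_sec / 0.01`.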

The RTX 4090 will reach 82 TFLOPS FP16.

And the RX 7900 XT will hit 132 TFLOPS FP16.

So we will not reach the speed of the MI100, let alone the 362 TFLOPS FP16 of the MI250.
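Putting the quoted peak numbers side by side makes the gap clearer. A small Python sketch, using only the FP16 figures quoted in this thread (announced or rumored peak values, not measurements) and assuming VEAI speed would track peak FP16 throughput linearly, which is optimistic. `relative_to` is a hypothetical helper name.

```python
# Peak FP16 figures as quoted in this thread (announced/rumored, not measured).
FP16_TFLOPS = {
    "RTX 4090": 82.0,
    "RX 7900 XT": 132.0,
    "MI100": 184.6,
    "MI250": 362.0,
}


def relative_to(reference: str, table: dict[str, float]) -> dict[str, float]:
    """Return each card's peak FP16 throughput as a multiple of the reference card's."""
    ref = table[reference]
    return {name: tflops / ref for name, tflops in table.items()}


if __name__ == "__main__":
    ratios = relative_to("MI100", FP16_TFLOPS)
    # Print slowest to fastest relative to the MI100.
    for name, ratio in sorted(ratios.items(), key=lambda kv: kv[1]):
        print(f"{name:>10}: {ratio:.2f}x MI100")
```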

I did notice that the price of the Radeon Pro VII has come down to 1500€, so maybe an MI100 for desktops is coming.

But expect that one to cost 5K.

The RTX 4090 cards are being released on October 12th. (That is about 9 hours from now at the time of this writing.) The expected price for the Gigabyte “gaming” OC model is $1699.00 (US), and I’m going to snap one up ASAP. I ended up paying an outrageous amount for my Gigabyte RTX 3090 Vision OC, but it is a very nice card.

The 4090 is 50% faster in Blender renders. Not sure how that carries over to this, but it gives you an idea. I would get one, but it will not fit in my case, so I am stuck with 2x 3080.

It all depends on who programmed the CODECs in use. I’m certain there are CODECs specifically designed to run fastest on certain classes/brands of GPUs. Unfortunately, I think the ProRes 422 HQ isn’t one of them.

I’ll be putting a 4090 through its paces tomorrow, including comparing VEAI upscale times on the 3090 vs the 4090. Supposedly Topaz has both AV1 support and dual-NVENC support in the pipeline, in collaboration with Nvidia, as the new 8th-gen NVENC in the 40-series has two NVENC blocks (on 12GB and above models), which can apparently encode two streams in parallel or both work on a single stream. Hopefully Topaz releases the update soon.

Now that is interesting. What forum will you be sharing the review in?

I had to buy a monster-sized case just to support the 420mm radiator for cooling my CPU. My 3090 has been doing pretty well, now that they are actually writing code that will utilize its capabilities. I know the 4090 I’m buying will be able to fit in it. Even the biggest one that Gigabyte is currently listing will fit with room to spare.

However, some of the newer Intel CPU/GPU units will have some powerful video rendering capabilities. In a year or two, we may not even need a separate video card… I have Intel 10th & 11th gen motherboards with their corresponding i9-K CPUs in them, overclocked, too.

Unfortunately, the 12th Gen looks like it will be the charm. The i9 will avoid overheating by having a CPU chip with a lot more surface area. It will run slower, but it will have many more cores than the 10th or 11th gen did, so it will be much faster for multi-threaded application processing. And it will also have the enhanced GPU capabilities.

Of course the next challenges will be finding reasonably-priced UPS units that can support the bigger PSUs. They can get very expensive.

As for cooling, I am developing what I think will be the ultimate cooling solution. I am planning on building a prototype as soon as it gets cool enough to start using my garage machine shop. (And my wife gives me the time to do it.)

I think 14th gen is bringing a 20% uplift. At this point, I think it would be better to get a second box, do a small ITX build, and just let it run 24/7, because things are getting really fast. Cheap too, if you have a good job. (I do.)

I’m retired. But I saved some money and bought many toys ahead of time.

I look forward to your tests.

I’m curious to see how it performs.

I unboxed my new Gigabyte GeForce RTX 4090 this morning. It will probably be a day or two before I have a chance to install it in place of my RTX 3090.

I have PassMark PerformanceTest on this machine. Before uninstalling the 3090, I will uninstall all of my OC software and run the benchmark tests. I will then replace the 3090 with the new 4090 and, after making certain I have the appropriate (Nvidia) drivers etc. in place, run the benchmarks again, so I’ll be able to report on the native speed improvement.
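When comparing before/after runs, keep in mind that a percentage gain in fps is not the same number as the percentage drop in seconds per frame, and this thread quotes both units. A minimal Python sketch of the two conversions; the function names and the 0.12/0.10 sec/frame timings are hypothetical placeholders, not benchmark results.

```python
def fps_gain_percent(old_sec_per_frame: float, new_sec_per_frame: float) -> float:
    """Percent increase in frames per second when sec/frame drops from old to new."""
    if old_sec_per_frame <= 0 or new_sec_per_frame <= 0:
        raise ValueError("timings must be positive")
    return (old_sec_per_frame / new_sec_per_frame - 1.0) * 100.0


def time_saved_percent(old_sec_per_frame: float, new_sec_per_frame: float) -> float:
    """Percent reduction in time per frame when sec/frame drops from old to new."""
    if old_sec_per_frame <= 0 or new_sec_per_frame <= 0:
        raise ValueError("timings must be positive")
    return (1.0 - new_sec_per_frame / old_sec_per_frame) * 100.0


if __name__ == "__main__":
    old, new = 0.12, 0.10  # placeholder sec/frame timings, e.g. old card vs new card
    print(f"fps gain: {fps_gain_percent(old, new):.1f}%")
    print(f"time saved per frame: {time_saved_percent(old, new):.1f}%")
```

With those placeholder timings, the new card is 20% faster in fps terms but saves only about 16.7% of the time per frame.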

Whether it will be faster in VEAI or not will depend on whether Topaz has been using an Nvidia driver SDK that already supports and optimizes for both the 3090 and the 4090. The 4090 has a few extended capabilities the 3090 didn’t, and it is much faster overall. However, implementing this can take a while. So far, Topaz has managed to increase GPU utilization and frame rate in VEAI considerably, and they claim they are still optimizing.

When I first put my 3090 into this machine, none of my graphics packages could really utilize it except through the “universal” generic Windows driver, which (back then) made everything overheat and was still rather slow. Since then, most of the software vendors have integrated the appropriate drivers for the 3090. I have no idea whether they will fully support the 4090’s potential right away or whether it will take them a while to update, but we will see soon enough.

Later…

In theory, Ampere should have been the beta test for Ada Lovelace; the architecture is not that different.

Going from 3080 to 4090, I am only seeing a 10-20% increase in v3 (mostly Artemis 1080p or 1080p to 4K). Not sure whether Topaz is better optimized for Intel CPUs, since I am using a 5950 paired with 64GB RAM.

But the 4090 allows 3 parallel processes without much sweat (Topaz needs to allow more parallel instances).

And the power efficiency is incredible, especially when running the card with a slight undervolt like 0.9 V @ 2600 MHz.

At the moment I am running the gaming drivers without problems, not the Studio ones. Not sure whether that would make a difference. Might have to try that.

I can power limit my 3080 by about 50% and still get the same performance. I am happy I am sitting this one out. I hope that by the time the 5090 launches most of this stuff will be fixed and optimized, but I understand they are a small team.

I have been researching this on and off.

VEAI behaves like a normal video app: it is virtually always CPU-limited.

For this to improve, support for smaller processors would have to be dropped, which can’t be in the company’s best interest, as I think the majority of users are running something like an 8-core CPU with an RTX 3060/2070.
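An Amdahl's-law style estimate shows why a faster GPU helps so little when the CPU is the limit. This Python sketch assumes, hypothetically, that each frame's time splits into a CPU part that doesn't get faster and a GPU part that scales with the GPU speedup, run one after the other with no overlap. The GPU-share values are made up for illustration, not measured from VEAI, and `overall_speedup` is a hypothetical helper.

```python
def overall_speedup(gpu_fraction: float, gpu_speedup: float) -> float:
    """Amdahl's law: only gpu_fraction of the per-frame time gets gpu_speedup faster.

    Assumes CPU and GPU work on a frame run one after the other (no overlap),
    which is a simplification.
    """
    if not 0.0 <= gpu_fraction <= 1.0:
        raise ValueError("gpu_fraction must be between 0 and 1")
    if gpu_speedup <= 0:
        raise ValueError("gpu_speedup must be positive")
    return 1.0 / ((1.0 - gpu_fraction) + gpu_fraction / gpu_speedup)


if __name__ == "__main__":
    # Hypothetical GPU shares of frame time, with a GPU that is 2x faster.
    for share in (0.4, 0.7, 0.95):
        print(f"GPU share {share:.0%}: {overall_speedup(share, 2.0):.2f}x overall")
```

For example, if only 40% of frame time is on the GPU, a GPU that is twice as fast gives just 1.25x overall, and no GPU, however fast, can exceed 1 / (1 - 0.4), or about 1.67x.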

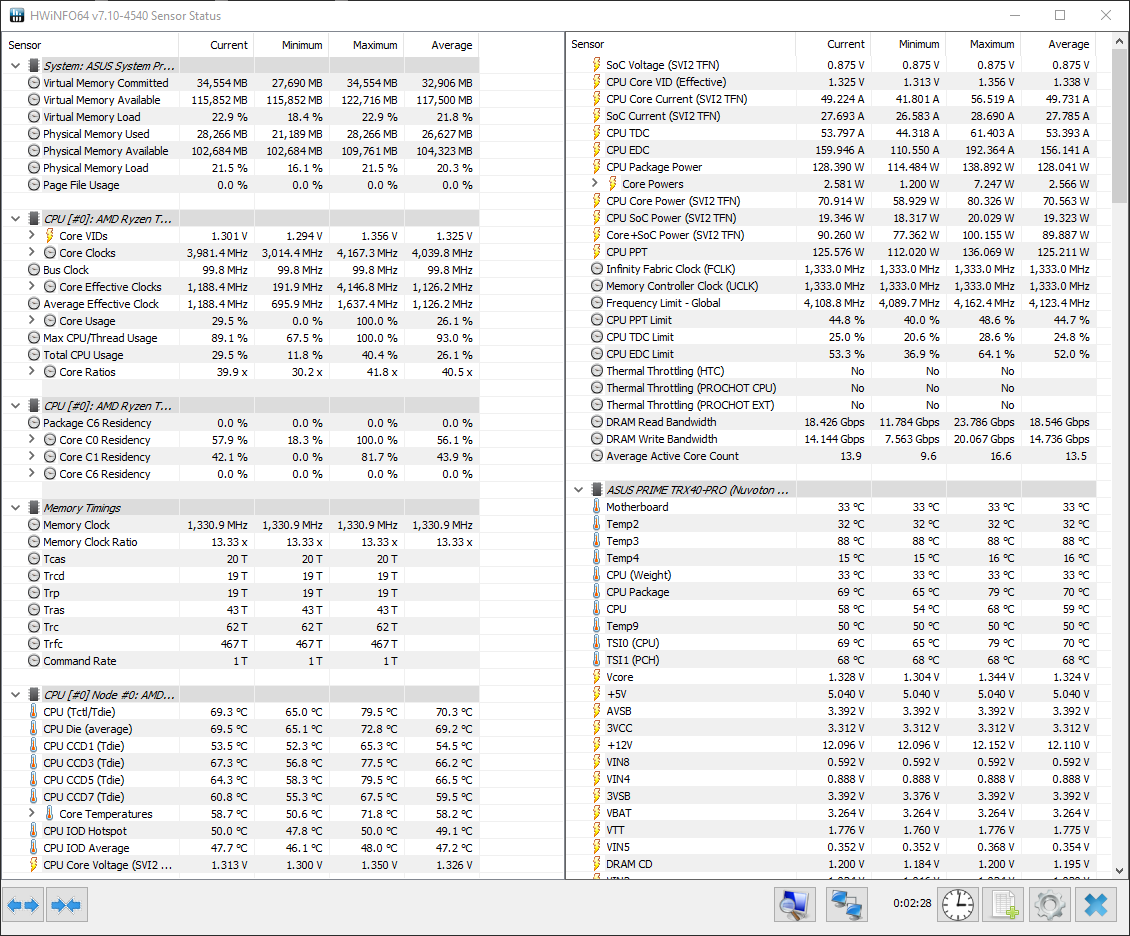

Even with Denoise AI, my average core utilization is the equivalent of 10 of my 24 cores.

Every time the GPU finishes a frame, the CPU ramps up to 16 cores.

Denoise AI - CPU & GPU Load

VEAI - Proteus Manual - Video = 4K 1:1 60FPS, mp4, Performance 0.7 fps, Radeon Pro W6800

Average Core usage = 13 Cores.
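The "average core usage" figures above read as total per-core utilization expressed in whole cores. A minimal Python sketch of that conversion, assuming you already have per-core percentages from a monitoring tool (the sample values below are invented, and the helper names are hypothetical). If the third-party `psutil` package is installed, `psutil.cpu_percent(interval=1, percpu=True)` returns such a per-core list for one sampling interval.

```python
from statistics import mean


def busy_core_equivalent(per_core_percent: list[float]) -> float:
    """Sum per-core utilization (0-100 each) and express it as fully busy cores."""
    return sum(per_core_percent) / 100.0


def average_busy_cores(samples: list[list[float]]) -> float:
    """Average the busy-core equivalent over several sampling intervals."""
    return mean(busy_core_equivalent(s) for s in samples)


if __name__ == "__main__":
    # Invented per-core samples for a 4-core CPU, not data from this thread.
    samples = [
        [100.0, 50.0, 25.0, 25.0],   # 2.0 cores' worth of load
        [100.0, 100.0, 50.0, 50.0],  # 3.0 cores' worth of load
    ]
    print(f"{average_busy_cores(samples):.1f} of {len(samples[0])} cores busy on average")
```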

RAM read and write is very high compared to my other apps.

Something must be wrong; I can’t even play the video smoothly in VEAI 3.0.0.

Yes, I understand. But I’m not sure the Nvidia SDKs they used can leverage the newer extended capabilities of the 4090. I believe that some of these may be able to enhance the performance and quality of the images.

I have some other graphics software applications that utilize AI to get better, faster results. If there is any improvement there, I’ll

Yes, it seems the CPU is the limit when using one of the more recent higher-end GPU models.

Here is a very recent CPU benchmark comparison of Topaz image upscaling and two other AI-related tasks:

https://www.techpowerup.com/review/intel-core-i9-13900k/9.html