Hi, I was using the trial of Topaz Video Enhance AI and it was great: the speed was around 0.20 sec per frame. Then I updated macOS Monterey to the latest version, and now Topaz is very slow, rendering at 2.30 sec per frame. Do you know how to solve this? I have a 2021 MacBook Pro M1.

I would like to know whether support for dual-CPU systems (like my HP Z840 with dual Xeons) is in the pipeline. I have noticed that only one of my CPUs gets used (CPU 0, 12 physical / 24 logical cores). I would expect a large boost if both CPUs could be used. Note that running another instance does not make VEAI use CPU 1.

If I could make a suggestion from personal experience: the scary face (distortion) comes from the AI not having enough frames to work from. I usually use Flowframes to raise the frame rate to 60 fps before processing video files in VEAI. Turning the sliders down for high-speed shots also helps; with slower, static shots you can get away with moving the sliders up.

It seems quite logical to add several things to new releases:

- Restoration of old film footage in a single model, or an option to build a model pipeline:
  - removal of dirt, scratches and other artefacts;
  - stabilization of the footage;
  - removal of brightness and color flicker;
  - color correction, and maybe colorization of b/w footage.
- An FPS conversion option inside each preset, not only as a separate render (or as part of a model pipeline option).
- The ability to configure more output parameters for each codec. For example, there is currently no ProRes or ProRes LT (only HQ) and no MXF (the film and video industry standard), you cannot set any x264 options other than CRF, and it is not possible to use codecs installed on the system or to encode through FFmpeg.

Thanks

I feel like it’s taking my soul, but I can’t look away.

Hey there, I’ve been trying to understand something since the beginning but haven’t succeeded yet (lol).

It seems that, depending on the resolution, when it isn’t a “usual” one, VEAI can slow down or speed up, depending on some factor.

For example, on my machine, converting 720p (1280x720) to 1080p (1920x1080) with a scale factor of 150% takes 1.21 s/frame,

but converting 1184x826 to 1920x1080 at a 131% scale takes more than double the time.

I assume that converting the 1184x826 source to 1280x720 first would fix the speed issue, but I wanted to know why it does this and when it happens (so I know which videos I must re-convert or crop before sending them to VEAI and which not). Thanks!

If I have a 1280x720 video that is not very clean, I will sometimes scale it down to 854x480 to get a speed advantage. I convert it to MP4 using a quality of 0 in StaxRip. Then in VEAI I upscale to 1080p (225%) at a CRF of 5. Finally, I take that file back into StaxRip and process it to the final size and quality I want.

In this case, vertical resolution is the driving factor. Also remember that any scale factor over roughly 125 or 130% is automatically upscaled to 200% by the AI and then downscaled to your chosen resolution; at least, that is how it used to work. If you’re working with very high-quality videos, you might be better off doing the final scaling from 200 or 400% in an external program, where you have much more control.

Hi Mike, yes, I know this for the most part. I noticed that the switch from the 2x model to the 4x model happens exactly at 239%. To speed up the process, someone suggested I stay on the 2x model and then do a separate upscale once it’s close to the result I want. But I noticed that, much of the time, the 4x model gave a much better result, even when it had to downscale afterwards to get back to 1080p (e.g. at a 279% or even 300% scale). I’ve also been playing with Hybrid (the software) for a few days, and a lot can already be done in such software in terms of denoising etc., which can improve the upscale, since source quality matters so much in VEAI.
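The thresholds reported in the two posts above can be written down as a small function, as a way to reason about which model pass a given scale factor lands in. This is a sketch of forum-observed behavior only: the 130% lower bound (taken from “over like 125 or 130”), the 239% switch point, and the function names `model_pass` and `final_resize` are assumptions for illustration, not Topaz’s documented logic, and the behavior may differ between VEAI versions.

```python
from typing import Optional

# Thresholds as reported by users in this thread; NOT official Topaz values.
LOWER_THRESHOLD = 1.30   # "over like 125 or 130" (assumed 130% here)
FOUR_X_THRESHOLD = 2.39  # switch from the 2x to the 4x model observed at 239%


def model_pass(scale: float) -> Optional[int]:
    """Return the native factor of the model the thread says VEAI uses.

    Returns None at or below the lower threshold, where the thread does
    not describe the behavior.
    """
    if scale <= LOWER_THRESHOLD:
        return None
    return 2 if scale < FOUR_X_THRESHOLD else 4


def final_resize(scale: float) -> Optional[float]:
    """Resize applied after the model pass (<1 is a downscale, >1 an upscale)."""
    native = model_pass(scale)
    if native is None:
        return None
    return scale / native


for pct in (131, 150, 225, 239, 279, 300):
    s = pct / 100
    print(f"{pct}%: {model_pass(s)}x model, then resize by {final_resize(s):.3f}")
```

Under these assumptions, the 225% upscale from 480p mentioned earlier stays on the 2x model and is finished by a 1.125x resize, while the 279% and 300% cases go through the 4x model and are then downscaled.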

So if, as you say, vertical resolution is the driving factor, I’ll run some tests. I sometimes also downscale to 854x480, or to 960x540. So I’m curious to know at which vertical resolution the software slows down or speeds up! I’ll have to do some tests so I can harmonize my workflow (thanks!).
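Since the replies point to vertical resolution as the driving factor, it can help to compute the per-axis scale factors before queuing a job. The helper below is a hypothetical sketch (the function name is made up for illustration) that prints the horizontal and vertical scale needed to reach 1920x1080 from the resolutions mentioned in this thread. It only does the arithmetic; it says nothing about why VEAI is slower on some inputs.

```python
def scale_factors(src: tuple[int, int], dst: tuple[int, int]) -> tuple[float, float]:
    """Return (horizontal %, vertical %) needed to go from src to dst."""
    (sw, sh), (dw, dh) = src, dst
    return 100 * dw / sw, 100 * dh / sh


TARGET = (1920, 1080)
for src in [(1280, 720), (1184, 826), (854, 480), (960, 540)]:
    h, v = scale_factors(src, TARGET)
    print(f"{src[0]}x{src[1]} -> {TARGET[0]}x{TARGET[1]}: "
          f"horizontal {h:.1f}%, vertical {v:.1f}%")
```

For 1184x826 this gives about 162.2% horizontally and 130.8% vertically, so the 131% in the question matches the vertical ratio only: 1184x826 is not 16:9, and a uniform ~131% scale yields roughly 1548x1080, which still needs padding or cropping to fill a 1920x1080 frame.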

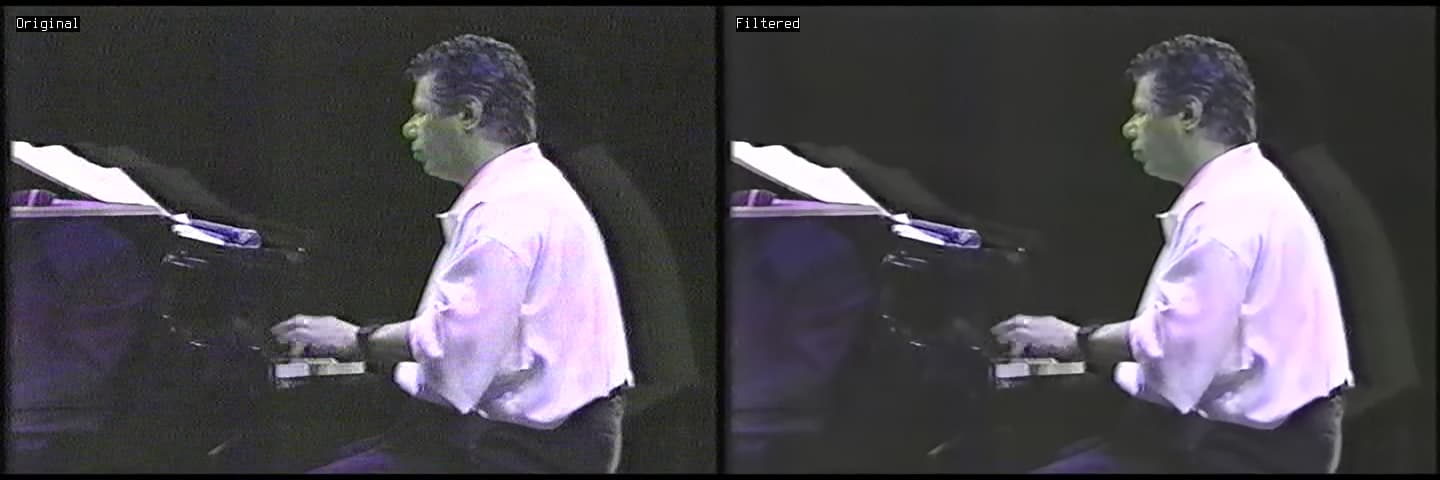

Here is an example with a very bad VHS, recorded in NTSC and transferred to PAL (which lost the color, of course), but it was just to see whether, in terms of denoising, I could produce something better to feed VEAI. So all this is worth investigating!

One thing is for sure: I don’t think there will be a time in the near future when the process is just clicking a button and letting the AI figure everything out on a single consumer-grade computer.

Have you heard of the VideoINR model? Do people use it to learn? How good are the results?

VideoINR’s setup is giving me issues due to compiler and PyTorch errors on Windows with the latest MSVC + Anaconda, as suggested. I am going to downgrade CUDA from 11.x to 10.2 and see if that works.

In the ‘Closed issues’ section on their GitHub page, someone supposedly got it running in Google Colab, but I’d expect you to need at least a T4/P100 GPU for decent speeds on larger videos. If I can get it working, I’ll do a 4x upscale comparison with VEAI on Big Buck Bunny 480p.

Very professional! Good job.

Avast, matey! Despite it being a false positive, it took Avast 5 hours to decide VEAI was allegedly malware??

For some time now, Avast has been total crap. For my part, I uninstalled it because it blocked my Wi-Fi dongle from connecting and blocked file transfers between my tower and my laptop. Each time, I had to add the blocked item to the exclusions or disable the Avast firewall, but it got around that with every Avast update and I had to start again.

Since then, I’ve gone back to the built-in Windows antivirus plus Malwarebytes, and it’s fine.

Thought this might be interesting to some. I just built a third AMD Ryzen computer from some cheap used parts. I had to repair a single broken pin on the CPU, something I had last done many years ago. This new machine has a Ryzen 7 5700G APU with 16GB of its 32GB of RAM dedicated to the Vega GPU section, and I also added my old 6GB GTX 1660 Super.

The other machines are a Ryzen 9 3900X with an 8GB RTX 3060 Ti and a Ryzen 5 5600X with a 12GB RTX 3060 (non-Ti).

Here are the numbers for a 480p to 1080p upscale (225%), including the ‘All’ selection on the 5700G/1660:

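| Machine | Processor selected | Speed (sec/frame) |
|---|---|---|
| 5700G + GTX 1660 Super | All | 0.26 |
| 5700G + GTX 1660 Super | GTX 1660 Super | 0.34 |
| 5700G + GTX 1660 Super | 5700G Vega | 0.40 |
| 5700G + GTX 1660 Super | 5700G CPU | 1.92 |
| 5600X + RTX 3060 | RTX 3060 | 0.12 |
| 5600X + RTX 3060 | 5600X CPU | 2.05 |
| 3900X + RTX 3060 Ti | RTX 3060 Ti | 0.11 |
| 3900X + RTX 3060 Ti | 3900X CPU | 1.19 |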
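To put the per-frame numbers of the benchmark above in perspective, the sketch below converts them into frames per second, GPU-over-CPU speed-ups, and estimated render times. The 10-minute, 30 fps clip is an assumed example, not something measured in the post, and real jobs also spend time on decoding and encoding.

```python
# Seconds per frame from the 480p -> 1080p (225%) benchmark above.
BENCH = {
    "5700G + GTX 1660 Super (All)": 0.26,
    "GTX 1660 Super": 0.34,
    "5700G Vega": 0.40,
    "5700G CPU": 1.92,
    "RTX 3060": 0.12,
    "5600X CPU": 2.05,
    "RTX 3060 Ti": 0.11,
    "3900X CPU": 1.19,
}


def render_minutes(sec_per_frame: float, clip_minutes: float, fps: float) -> float:
    """Estimated processing time in minutes for a clip of the given length."""
    frames = clip_minutes * 60 * fps
    return frames * sec_per_frame / 60


CLIP_MINUTES, FPS = 10, 30  # assumed example clip (18,000 frames)
for name, spf in BENCH.items():
    print(f"{name:30s} {1 / spf:5.2f} frames/s  "
          f"~{render_minutes(spf, CLIP_MINUTES, FPS):6.1f} min")

# Speed-up of each GPU over the CPU in the same machine.
print(f"RTX 3060 vs 5600X: {BENCH['5600X CPU'] / BENCH['RTX 3060']:.1f}x")
print(f"RTX 3060 Ti vs 3900X: {BENCH['3900X CPU'] / BENCH['RTX 3060 Ti']:.1f}x")
```

For that assumed clip, the RTX 3060 at 0.12 s/frame works out to about 36 minutes, versus 615 minutes (over 10 hours) on the 5600X CPU alone.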

I tried image sequence > video: the problem is that VEAI builds video from images only at a 30p frame rate. No 25p or anything else.

With only a sequence of frames as input, why is there no option to choose the frame rate for the video?

Obviously, if an original 25p video is rebuilt as video > images > video at 30p, it plays back faster.

I’ve encountered that problem myself. My solution, as I recall, was to run the output video through VirtualDub, exporting to uncompressed AVI with the output frame rate set to what it should be.
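The speed-up is simple arithmetic: frames played back at a higher rate than they were shot at run faster by output_fps / source_fps, so 25p frames assembled at 30p play 1.2x too fast and a 60-second clip shrinks to 50 seconds. As an alternative to the VirtualDub route, the sketch below builds an FFmpeg command that assembles an image sequence at an explicit rate using the image demuxer’s `-framerate` option. The file pattern `frame_%06d.png`, the output name, the x264 settings and the helper names are assumptions for illustration; adjust them to match what VEAI actually exported.

```python
import shlex


def playback_speed(source_fps: float, output_fps: float) -> float:
    """Factor by which playback speeds up when frames are retimed."""
    return output_fps / source_fps


def image_sequence_cmd(pattern: str, fps: str, output: str) -> list[str]:
    """FFmpeg command that encodes an image sequence at the given frame rate."""
    return [
        "ffmpeg",
        "-framerate", fps,      # input rate for the image sequence, e.g. "25"
        "-i", pattern,          # printf-style pattern such as frame_%06d.png
        "-c:v", "libx264",
        "-pix_fmt", "yuv420p",  # widely compatible pixel format
        output,
    ]


speed = playback_speed(25, 30)
print(f"25p frames played at 30p run {speed:.1f}x fast; "
      f"a 60 s clip lasts {60 / speed:.0f} s")
print(shlex.join(image_sequence_cmd("frame_%06d.png", "25", "restored_25p.mp4")))
```

Run the printed command in a shell, or pass the list to `subprocess.run(cmd, check=True)`. Passing the rate as a string also allows fractional rates such as `24000/1001`.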

Is anyone else having the program crash every time you try to import a video for processing, either by dragging it onto the interface or by using the browse/select file feature?

Error report is too long to post.

I have restarted and reinstalled and am still getting the same error.

It seems to be some of my source files causing it. Oddly enough, anything I copied to my computer today has issues, but older files don’t. I’ll keep digging, but I’m still curious whether anyone else has encountered this issue.

I had a similar issue with some uncompressed AVIs a while back. Stripping the audio out and just importing the video streams did the trick.
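The post doesn’t say which tool was used to strip the audio. One way to do it, assuming FFmpeg is installed, is to copy only the video stream(s) into a new file without re-encoding, as in the hypothetical helper below: `-map 0:v` selects every video stream of the first input, so audio is left out, and `-c copy` avoids re-encoding. The file names and function names are placeholders.

```python
import shlex
import subprocess


def strip_audio_cmd(src: str, dst: str) -> list[str]:
    """FFmpeg command that copies only the video stream(s) from src into dst."""
    return ["ffmpeg", "-i", src, "-map", "0:v", "-c", "copy", dst]


def strip_audio(src: str, dst: str) -> None:
    """Run the command; raises CalledProcessError if FFmpeg fails."""
    subprocess.run(strip_audio_cmd(src, dst), check=True)


print(shlex.join(strip_audio_cmd("capture.avi", "capture_video_only.avi")))
```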

Three months with no update… are you guys serious?