With the Quadro M2200 in the Lenovo laptop workstations we are using, it takes 1.5 sec per frame, so there is no time to waste on TV series. Currently we are remastering source footage from a feature film we shot in 2004 in SD. I started the remaster out of curiosity and to find and learn new ways to treat old archive footage. I’ve been doing that for many years with varying success, but this time the results are so good that I am really excited about the possibilities.

The source footage on tape for that movie would be over 80 hours, but in the end only the used takes get upscaled, which might be around 4 hours of footage. A take can be 20 seconds long or several minutes, depending on the scene we shot.
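For a rough sense of scale, those numbers can be turned into a total render time. This is only a back-of-the-envelope sketch: the 1.5 sec per frame and the roughly 4 hours of takes come from the post, while the 25 fps frame rate is an assumption, since the post does not say how the footage was shot.

```python
def total_render_hours(footage_hours, source_fps, seconds_per_frame):
    """Estimate the processing time in hours for a given amount of footage."""
    frames = footage_hours * 3600 * source_fps  # total number of frames
    return frames * seconds_per_frame / 3600    # seconds of processing -> hours


# 4 hours of takes, ASSUMED 25 fps, 1.5 sec per frame
hours = total_render_hours(4, 25, 1.5)
print(f"about {hours:.0f} hours of processing on one machine")
```

Under those assumptions the upscale alone comes to about 150 hours of machine time, before any VFX or grading work.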

Then comes the harder part of the remaster: redoing all the VFX and regrading every scene.

It’s a labor of love and passion and commercial suicide.

I see.

To come back to my concern, I think I found a solution. I installed MSI Afterburner, which “daniel_1188” recommended to me. As it happens, my graphics card is an NVIDIA RTX 2080, also by MSI. I adjusted the fan so that the GPU does not exceed 65 °C. Before that, it often climbed to 80 °C, with spikes to 84 °C at times. It makes a little more noise, but now it seems stable: the temperature varies between 62 °C and 65 °C, never higher.

I hope that with this method I will not have any more system crashes.

I have an RTX 3090 and an i7. Case fans: 4 IN / 1 OUT, 140mm, 1000/1200 RPM. Enermax ETS-T50A-FSS, 140mm, 1000 rpm. 5 slots fully open (OUT air flow).

I use two instances. I just ran some jobs that went on for over a week without any interruption, with the CPU at 80-100% and/or the same for the GPU. The RTX 3090 (Palit Rock) has only the NVIDIA driver installed and nothing else.

Thank you, I’ll take note. I only installed it yesterday. So I haven’t experienced all of them yet.

On my Windows 10 Pro v1909, I have not yet had the 20H2 upgrade. I’ll wait for it to come automatically as I always get cumulative updates. This means there must be some preparation behind it for the next big upgrade.

Hi,

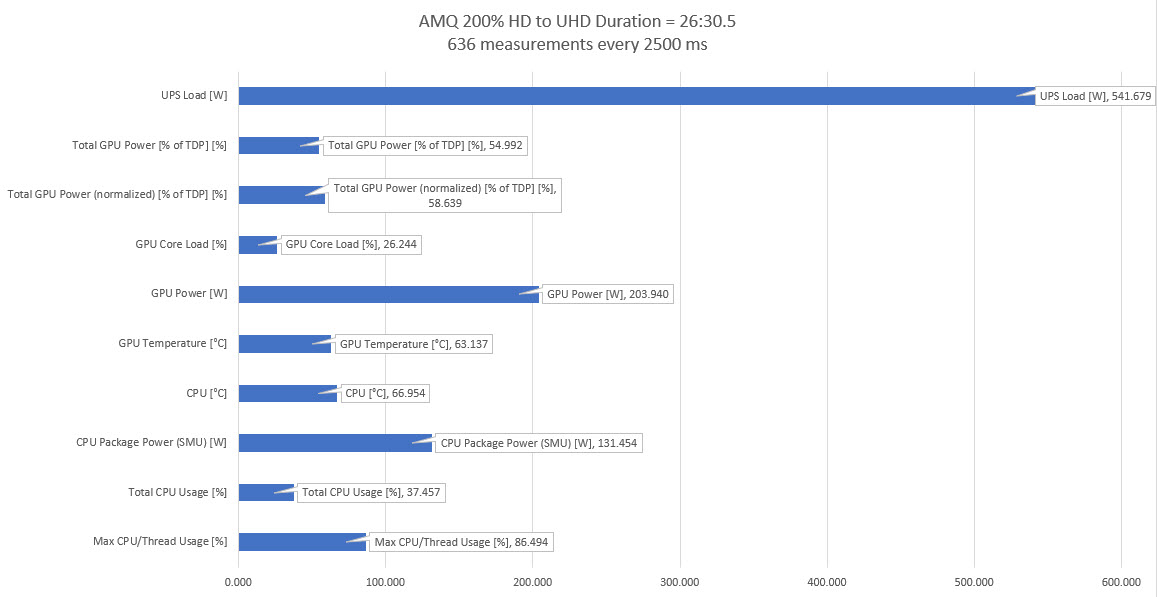

I did a test with HWiNFO to see how hard VEAI makes the hardware work, in particular an RTX 3090 on Win 10 with my power options set to “Optimal performance”, which offers maximum performance. Driver: Nvidia Studio 461.72.

I took an HD clip (duration 4 min 27 s 250 ms) and encoded it to UHD. The CPU is heavily used, and so is the GPU: in 245 of the 636 measurements, the GPU was in Performance Limit - Max Operating Voltage, which means:

that the GPU essentially stops drawing more power once the card senses that it has reached its safe voltage limit. In other words, the card is being used to its maximum at this stage.

Maybe the use of the GPU can still be optimized, maybe not, but I find that it is not so bad.

In the meantime, make sure you have a good PSU, keeping in mind the gap between the real wattage (here 541 watts on average) and what to expect once you account for the loss of 10 to 20%, depending on whether the PSU is rated “Platinum” or “80 Plus”.

Hope this helps.

Hi,

I think that all the cumulative updates that you install are already integrated in version 20H2 and in addition include other updates only compatible with 20H2. It’s your choice to wait, no problem for me.

Yeah. It’s true they are identical. I have the same ones on my Windows 10 Home 20H2 laptop. I made a folder to keep the installers of the cumulative updates, with 2 subfolders: one for 1909 and one for 20H2. It saves me from downloading them again if one day I have to reinstall Windows.

Since I installed MSI Afterburner, my PC seems more stable when using Video Enhance. I manage to stay between 65 and 68° on my GPU. This is much better, because before I was between 79 and 80°, and sometimes between 80 and 84°. Also, since versions 2.1.0 and 2.1.1, the software is even more resource-intensive. That’s why I had repeated bluescreens.

Another point: the “Load last auto save” button has not worked since 2.1.0. Before, I had a save file in “C:\Users\user name\AppData\Roaming\Video Enhance AI” which let me find where I had stopped so I could resume later. But now no file is created any more when a video enhancement is launched.

Btw, the RTX 3000 series needs a good PSU, and I’m not sure if it’s fixed, but at launch the cards were unstable if they boosted over 2 GHz…

I know that you have a 2080 card, but if you get crashes again, you can try reducing the GPU clock by maybe 50 MHz, or reducing the power limit of the GPU if you can. I think it’s not only a temperature problem; it could also be a problem with power consumption.

My RTX 3090 needs 350W at 100% load, if I use it with other AI software.
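To see whether crashes line up with temperature or with power draw, both can be logged while VEAI runs. Here is a minimal sketch, assuming an NVIDIA driver that ships the `nvidia-smi` command-line tool. The helper names `parse_gpu_line` and `read_gpus` are made up for illustration, and some cards report `[N/A]` for certain fields, which the sketch maps to `None`.

```python
import subprocess

# Fields requested from nvidia-smi: temperature (°C), power draw (W), SM clock (MHz)
QUERY = "temperature.gpu,power.draw,clocks.sm"


def parse_gpu_line(line):
    """Parse one CSV line such as '65, 250.31, 1905' into a dict of floats."""
    def to_number(field):
        try:
            return float(field)
        except ValueError:
            return None  # e.g. '[N/A]' when the card does not report the value

    temp, power, clock = (to_number(f.strip()) for f in line.split(","))
    return {"temp_c": temp, "power_w": power, "sm_clock_mhz": clock}


def read_gpus():
    """Query every GPU once; needs nvidia-smi on the PATH."""
    out = subprocess.run(
        ["nvidia-smi", f"--query-gpu={QUERY}", "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    ).stdout
    return [parse_gpu_line(line) for line in out.splitlines() if line.strip()]
```

Calling `read_gpus()` every few seconds during an encode and writing the result to a file would show what the card was doing just before a crash. If the card and driver allow it, the power limit itself can be lowered with `nvidia-smi -pl <watts>`, which needs administrator rights.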

Hey guys, how does the Gaia CG model hold up in this latest build compared to 1.6.1?

I read some things online that the quality has decreased after the 1.6.1 build.

Windows manages that far better now. The ones you have saved wouldn’t be relevant anymore and would be packaged in with “new” updates you would get when you install. Not to mention, you’d be using a freshly downloaded copy of Windows 10 that would likely have all those updates in it.

TLDR: Absolutely no point saving updates in a folder. Done properly, Windows is almost totally up to date at install.

Actually no.

e.g. if you have a 25 fps video and you get 5 fps, it is easier to multiply the minutes of the video by 5 (25/5 = 5 times x realtime) to get a fast estimation in your head.

The same goes for any fps.

The reason is simple. With fps you are dealing with very small numbers, easy to calculate in your head. That’s why everybody uses FPS instead of sec/frame.

If you want to be precise and use a calculator, there is no difference between FPS and Sec/Frame.

EDIT: Also, with fps you always know how close you are to realtime processing and can adjust accordingly. With sec/frame that makes no sense whatsoever.
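For anyone who prefers the calculator route, the two ways of reading the speed are just reciprocals of each other. This is a small sketch of the arithmetic above; the function names are only for illustration and nothing here is taken from VEAI itself.

```python
def seconds_per_frame(processing_fps):
    """Convert a processing speed in fps into sec/frame."""
    return 1.0 / processing_fps


def realtime_factor(source_fps, processing_fps):
    """How many times longer than realtime the processing takes."""
    return source_fps / processing_fps


def processing_minutes(video_minutes, source_fps, processing_fps):
    """Estimated processing time for a clip of the given length."""
    return video_minutes * realtime_factor(source_fps, processing_fps)


# The example from the post: a 25 fps video processed at 5 fps
print(realtime_factor(25, 5))         # 5 times realtime
print(processing_minutes(10, 25, 5))  # a 10-minute clip takes about 50 minutes
print(seconds_per_frame(5))           # the same speed expressed as sec/frame
```

Either display carries the same information; the fps form just makes the “x realtime” multiplier easier to do in your head.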

Would it be extremely difficult to simply make FPS or sec/frame something that can be toggled in the GUI? That would appease all customers.

Exactly. A simple button in “Preferences” would be enough for anybody.

Actually no.

e.g. if you have a 25 fps video and you get 5 fps, it is easier to multiply the minutes of the video by 5 (25/5 = 5 times x realtime) to get a fast estimation in your head.

That would be true if VEAI showed you the duration of the video, but it only shows frame numbers.

I much prefer sec/frame, as that is how most professional software I use displays it, for example After Effects or Blender. For long renders I find it much more informative to see how long each frame takes to render.

But as this is a matter of personal preference, I would also like to see a toggle for what to display.

I always liked the “x realtime” feature of After Effects, and hated the sec/frame (or minutes, or even days per frame, during the 90s on Amiga) in LightWave.

But, as you and shodan5000 said, it is a personal preference thing.

So, we ask for a button! LOL

Oh the good old days:

Lightwave 3.5 on Amiga 1200 - a 12 sec animation in SD took me a week to render


Gaia used to be really good, as you said. But the Artemis models are significantly better than they were before.

A user here made a really good post on what models to use for certain types of media. You can check it out here.

Video Enhance AI v2.0.0 - Product Releases / Video Enhance AI - Topaz Discussion Forum (topazlabs.com)

You can find it here, with more in-depth information.

VEAI Models + Performance Guide