Maybe they are genderfluid?

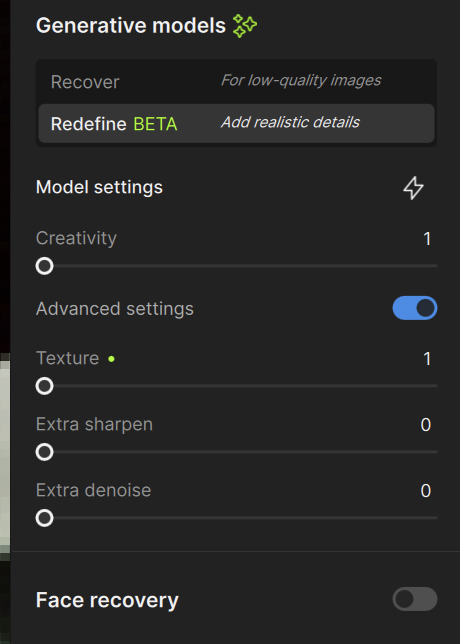

Yes, and also stay tuned. Redefine may not be the best comparison, but it is the nearest one for images right now.

Wicked! I’m waiting eagerly!

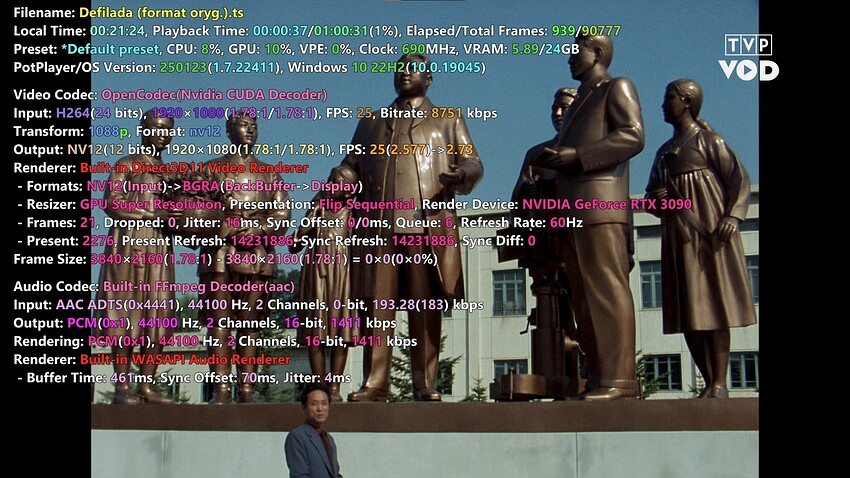

Fourth attempt with ‘no parameters’:

It’s not bad, actually! But.

Yeah, Starlink, err…, Stargate, drat, Starlight rocks.

By the way, I have this video in that low-resolution FLV container, but also as a 6 GB 1080p scan from the negatives, both before and after a Polish studio’s digital restoration, so I can see how the models fare.

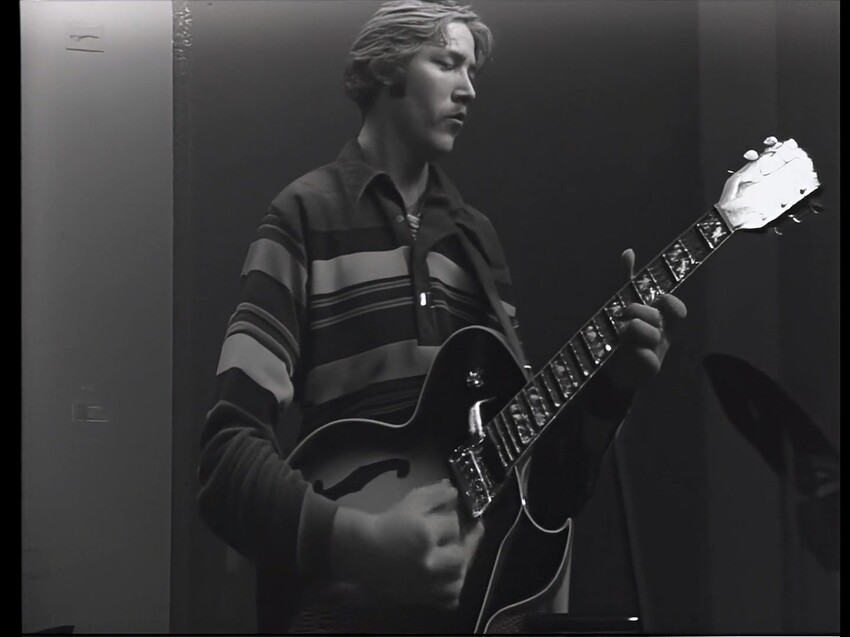

This is a frame from the untouched video, other than being scanned:

I wonder if the two videos, the compressed one and the scanned one, could be used together to tame the ugly compression artifacts?

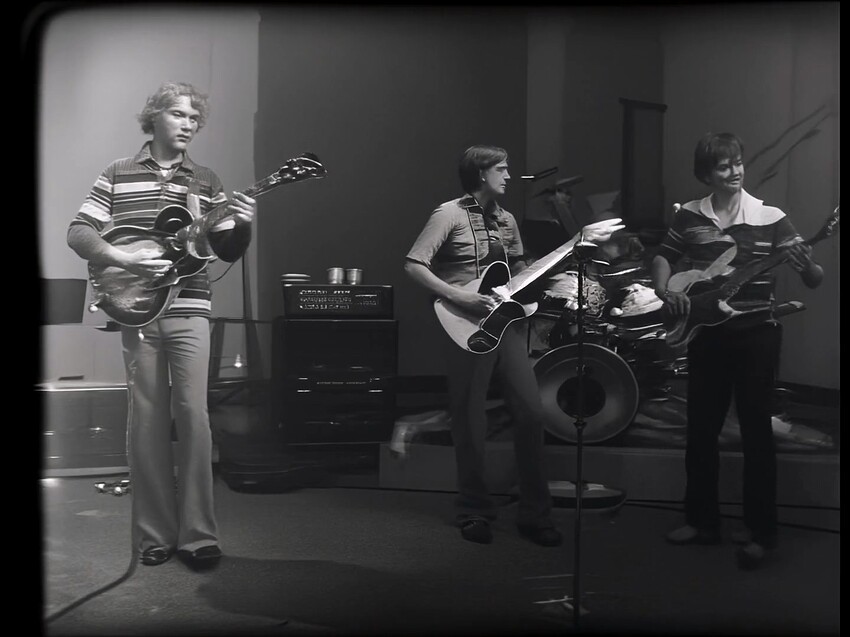

WOW! OK, I threw my real problem video at it, and on medium shots it looks amazing; on wider shots there is the ‘face’ problem. These were 18-year-old dudes in a band, and one of them looks like the Paul McCartney of today on his worst day!

His shirt looks excellent! Guitar pretty good (better than it was shot)

This looks a lot like him (and way better than it could’ve been in 1979-ish).

And there is this. Kinda unusable.

The GIGO principle still applies, no matter how advanced the model is.

However, we didn’t subscribe to yearly updates to get some new UI features. We subscribed for new models.

Now you’re using that money to build features for Cloud users only, and demand that we pay an additional extra for it.

Maybe so, but they are closing the gap!

Here’s the medium shot I was using. It was recorded on 1/2-inch reel-to-reel video, in the days before Beta vs. VHS. I guess this is all they had at CSUN in 1978-79: not even VHS, and in black and white.

So, GI-but maybe better out.

We released four new models for desktop processing in 2024, so it’s a big jump to see this as the moment local processing goes away.

The progress made through Starlight will benefit all future video model releases, even if the current Starlight model cannot run on consumer devices.

Would love if you could define that a little more.

AI is here and running large models locally is not uncommon.

While my powerful desktop with a 4090 doesn’t have that much RAM, Mac machines can have 96 or 192 GB of VRAM (unified memory), even if they are slower.

Don’t use the term “Consumer hardware” just to push people over to pro or cloud licenses. We may have powerful hardware for other uses even though we’re regular consumers of video software.

Is it really that it can’t run, or just that it would be incredibly slow? How long would an RTX 4090 take to run that 10 second preview that needs 20 mins in the cloud?

Pro and Standard license users have the exact same Starlight access right now, it is purely about system requirements in the current version.

Yes, right now we cannot offer local desktop processing. The model does not run at all; this is not a situation where we’ve decided certain cards are too slow but technically work.

Honestly, I wish they would provide the information by saying, “Due to the model’s VRAM requirements being several gigabytes, it is difficult to run locally.” Since some people might even set up an H100 cluster at home, simply stating that it is impossible without offering any details about the VRAM requirements will not convince the beta testers here.

Yes, right now the model is designed for the H100.

So it turns out that it requires 80GB of VRAM or more. Now I’m convinced. Even if someone had a consumer-grade RTX 5090, the 32GB VRAM limitation makes it clear that 99.999% of users wouldn’t be able to use it. Thank you for providing the specific requirements.
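For anyone who wants to check their own card against that number, here is a minimal Python sketch of the comparison. It assumes the 80 GB figure quoted in this thread (not an official spec), and the `fits` and `shortfall_gb` helpers are just illustrative names. It only compares memory capacity, which is a necessary condition and not a guarantee that the model would actually run.

```python
# Assumption: 80 GB is the requirement as quoted in this thread, not an official spec.
REQUIRED_VRAM_GB = 80

# Memory sizes (GB) for the cards mentioned in this thread.
GPU_VRAM_GB = {
    "RTX 4090": 24,
    "RTX 5090": 32,
    "H100 (80 GB)": 80,
}


def fits(vram_gb, required_gb=REQUIRED_VRAM_GB):
    """Return True if the card has at least the required amount of memory."""
    return vram_gb >= required_gb


def shortfall_gb(vram_gb, required_gb=REQUIRED_VRAM_GB):
    """Return how many GB the card is missing (0 if it has enough)."""
    return max(0, required_gb - vram_gb)


for name, vram in GPU_VRAM_GB.items():
    status = "fits" if fits(vram) else f"short by {shortfall_gb(vram)} GB"
    print(f"{name}: {vram} GB -> {status}")
```

Capacity is only the first hurdle: a model designed for one GPU architecture may still fail on other hardware that has enough memory.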

That’s correct for the current Starlight model. This type of model can (and will) be simplified and optimized to require less memory.

I bought an RTX 4090 because I know that a larger model can yield better quality; however, I have always regretted that the Topaz models use so little VRAM. For the sake of better quality, GPU cost, electricity bills, and extremely slow processing times have never been a concern for me.

Now, I am delighted to learn that Topaz is interested in a very large model that utilizes a high amount of VRAM. I am always grateful to the Topaz development and research team for their hard work, and I look forward to the day when I can run the low-cost version of Starlight locally.

Not interested at all in cloud based rendering. Not a fan of the direction of this company.