Today’s release marks a major milestone in the history of Topaz Video AI. We are releasing Project Starlight, the first-ever diffusion model for video enhancement.

This is a significant evolution over our existing models, and it’s the single largest increase in model capability since Video AI launched.

Even the most challenging footage can be enhanced with Project Starlight. So please try it on your “bad” footage: clips that may have produced artifacts with our existing models.

Because of its size, this model is significantly slower and more expensive to run than previous models, and requires cloud processing on server-grade graphics hardware. But this is just the beginning. Our mission as a product and research organization is to advance visual quality to the furthest extent possible—it just takes a little more processing power to get there right now.

We see a clear path toward expanding access to Starlight and other models of this generation to very high-end desktop GPUs, and Project Starlight is the first in a new series of models.

Project Starlight Research Preview

Active Video AI users can currently process three 10-second previews of Starlight per week for free. Results will be viewable through unlisted, shareable links with the new “Compare” button in Video AI.

These previews take about 20 minutes to render if servers are available immediately, and all exports are set to 1080p.

Starlight Early Access

After testing initial server capacity, we’ll be enabling up to 9000 frames of processing in a single export using cloud credits. We are currently pricing this service below cost, and are working to offer more usage at lower prices.
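To get a feel for what a 9000-frame export covers, here is a rough sketch that converts the frame limit into clip length. The frame rates below are common examples chosen for illustration, not values tied to Starlight, and `max_clip_seconds` is a hypothetical helper, not part of Video AI.

```python
def max_clip_seconds(frame_limit: int, fps: float) -> float:
    """Longest clip duration, in seconds, that fits within a frame limit."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return frame_limit / fps


FRAME_LIMIT = 9000  # per-export frame limit stated in the announcement

# Example frame rates are assumptions for illustration only.
for fps in (24, 30, 60):
    seconds = max_clip_seconds(FRAME_LIMIT, fps)
    print(f"{fps} fps: {seconds:.0f} s ({seconds / 60:.2f} min)")
```

Under these assumptions, a 30 fps clip could run for about five minutes in a single export, while a 60 fps clip would reach the limit in half that time.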

Our research team is eager to receive feedback and discuss this step-change in AI video upscaling. We appreciate your involvement on this journey to Video AI’s next frontier.

Project Starlight announcement on X

Project Starlight is now also available in the Video AI web app.

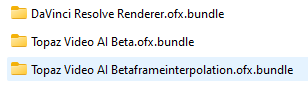

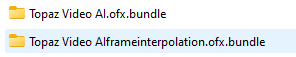

6.1.0.2.b