This is the result for 10 seconds of encoding after 40 minutes of cloud processing? Honestly I am really disappointed!

A little ST:TNG clip for y’alls to compare:

That’s a good idea, and something already possible. Sending fewer frames and using one of our Frame Interpolation models could save considerable time.

It’s not bad, considering the amount of data needed to train such a model from scratch.

Wow, the model can discern the foreground from the background already.

The scene complexity for your clip is extreme. I think the results are pretty good tbh…

I strongly disagree. Here is the result with the “RHEA” model starting from the same source…

Rhea is not as good - it has the artificial look that I don’t see in Starlight.

Of course, of course… But I honestly expected a much more extreme result. Moreover, Rhea is in 4x upscaling mode, with a resolution of almost 4K, while the Cloud video result is limited to 1080… Not to mention the huge difference in speed locally… This new Cloud model has a long way to go!

Why?

It’s quite good actually, being a bit better than IrisMQ on the foreground IMO but extremely so with the background, especially NOT doing crazy things to the little faces on those monitors (which is a BIG downside of all those face recovery models we had up to now):

When you compare this to the Rhea render:

I’d definitely prefer the Starlight version as it has those additional details and sharpness but doesn’t yell: “I’m an AI upscale” at you already in the first few seconds.

Also, Neo has that “stamped into the image” look in the Rhea upscale.

And this is in a data center probably running multiple H100 GPUs. Multiply the desktop time by, say… at least 10x. So we are back to 6 days running a 4090 or 5090 card 24/7 to process a 45-minute show. The electricity bill for that alone… I think we really are done for processing video locally… at least for Starlight. I hope I’m wrong though!

Wouldn’t really matter much here thanks to solar energy…

For 99% of people, it matters

Blasphemy!

No, you’re right

Like we’ve mentioned, local processing is definitely still a top-level priority for us. With next-gen models like Starlight, we expect most of the processing of shows to happen through on-premises server deployments like we offer for companies using our current models. This would then be streamed to audiences as a pre-rendered result from the model.

As hardware becomes more powerful/affordable and we continue to optimize the software, this will be easier and easier to run on consumer devices.

We’ve seen the same expansion of availability happen across VFX, gaming, simulation, CAD, and other industries, so we’re excited to see what we’ll be doing with server hardware in the future since it’s an indication of what’s possible for all of our users.

I thought the Redefine model would provide some sort of similar result…

I’ll test it on that frame for the sake of curiosity!

Yes, this is something people have requested from Iris and later Rhea since it was released - to just process the foreground in-focus objects and not artificially sharpen the background, esp. not faces which should stay out of focus.

I’d be extremely happy if it was possible to transfer only this feature into an updated Iris for local processing.

Like Rhea, but differentiating between foreground and background! Yes please!

The video has the following parameters:

Original image:

Gigapixel AI v8.0.2.11b with these parameters:

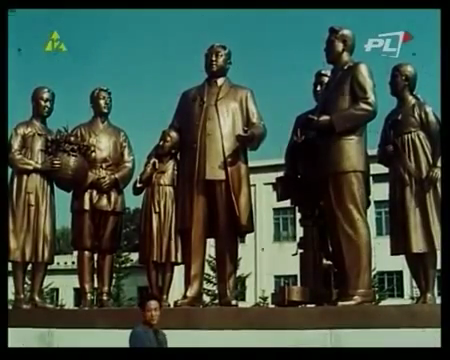

First attempt - the curator changed genders! Dear Leader Kim Ir Sen got some ‘true hair-do’.

Second try - the curator still wants to be a girl. Oh well, prompting time!

Third attempt, and finally the curator is male! But Kim Ir Sen became more manly, too.