Hi all, fun fact: only a marginal performance improvement with a 4090 on 3.0.8. Using Proteus at 720p with no upscale, I get 9 FPS on a 3090 and 10-11 FPS on the 4090. No surprise to anyone, I’m sure (I wasn’t expecting a massive difference), but there you go.

I prefer to stay with the RTX 3090; the 4090 is very bad for me!

Maybe I’ll buy an RX 7950XT soon.

What about bigger resolutions, like 1080p to 4K?

I think 8K is pointless.

I’m actually surprised at such a small performance improvement given the power of the 4090. I really hope this reflects the current lack of optimization rather than hardware limitations. If that is the best the card can do, then it is a bit of a let-down as far as upscaling goes.

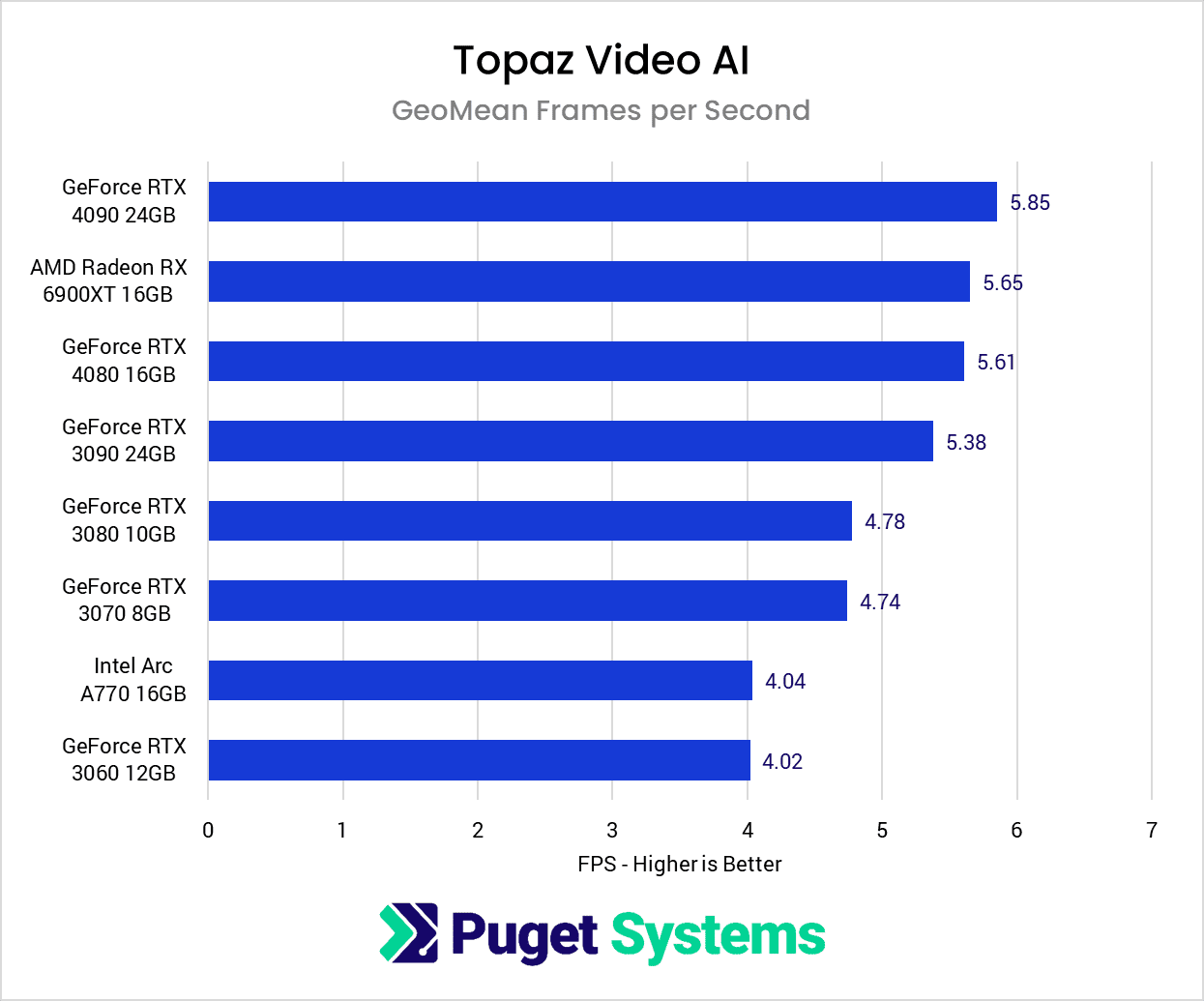

VEAI works much better with AMD due to Topaz optimization for AMD GPUs.

Would you guys try 1080p30 to 4K60p? I think it’s a more common use case.

I am currently running 3x 3090. Is it worth upgrading to 7950XT or 4090?

Fun question, where are you getting 3.0.8 from?!

Could mean 3.0.0-8, the final early release candidate prior to 3.0.0 IIRC?

It is without a doubt caused by (a lack of) optimization. My 4090 barely reports more than 5-20% utilization in Topaz Video AI 3.0.4, with relatively low speeds, but for a few days I was able to get it to run at 100%, and the speeds were quite good.

It’s also not a case of software misreporting the utilization: when the GPU was previously working at 100%, I could hear coil whine, which it never produces now in Topaz Video. I also had a few days of poor performance in Gigapixel/Sharpen/Denoise, but those have gone back to decent performance, and I can hear the coil whine while using them again.

1080p25 to 4K50p runs at 0.67 sec/frame with 5-9% utilization. Previously, with the exact same input file, this test ran considerably faster and used 100% of the 4090.

IMHO, the ONLY way to judge TVAI utilization of your GPU is to use something like GPU-Z. The Windows Task Manager, and most monitoring utilities, calculate load on the entire card while Topaz really only exercises the Tensor cores, encoder/decoder and VRAM.

In general, LONG before Topaz maxes out the processing units of the graphics card, you will run out of VRAM. I have an EVGA 3080Ti Hybrid w/12GB of RAM, and my most common use case at the moment is upscaling 480x640 DVD originals to 4K. On the 3080Ti I can run a dozen simple FFMPEG compression tasks and still have processor and memory to spare. However, when I run upscale jobs, I max out the VRAM after 4 simultaneous sessions while still only putting a 30% overall load on the card.

On a 4090 w/24GB of VRAM you may get 8 sessions running, but you still won’t get the utilization up over 30 or 40% - but your VRAM will be almost 100% allocated.
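As a rough back-of-the-envelope check of the session counts above: if VRAM is the binding constraint, the number of concurrent sessions is just total VRAM divided by each session’s footprint. A minimal Python sketch, assuming a constant ~3 GB per 480->4K session (inferred from 12 GB filling up at 4 sessions) and ignoring driver/desktop overhead; `max_sessions` is a hypothetical helper, not a Topaz setting.

```python
import math


def max_sessions(total_vram_gb: float, per_session_gb: float) -> int:
    """Number of concurrent sessions that fit before VRAM runs out."""
    return math.floor(total_vram_gb / per_session_gb)


# Assumption: every session uses the same amount of VRAM, inferred from
# 12 GB being filled by 4 sessions (no allowance for driver/desktop usage).
per_session = 12 / 4  # ~3 GB per 480 -> 4K session

for card, vram in [("3080 Ti", 12), ("4090", 24)]:
    print(f"{card}: ~{max_sessions(vram, per_session)} sessions")
```

This only models the VRAM ceiling; it says nothing about how fast each session runs once they are all competing for the card.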

Performance is not just about the video card either. As I type this I have four 480->4K jobs running. Each job is running at around 2.8 fps. If I run only one of them, it runs at around 5 to 6 fps, not 11 to 12. Overall performance depends on how many threads are being executed, and how much the work can be done by the GPU vs. the CPU (which is influenced by models in use, how much can be done by the video engine, the CUDA cores, the Tensor cores, and the dedicated encoder and decoder hardware).
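The per-job numbers above are easier to compare as total throughput. A minimal Python sketch, assuming all parallel jobs run at the same rate; the 2.8 fps and 5-6 fps figures are the ones quoted in the post, and `aggregate_fps` is a hypothetical helper.

```python
def aggregate_fps(per_job_fps: float, jobs: int) -> float:
    """Total frames per second across `jobs` equally fast parallel jobs."""
    return per_job_fps * jobs


single_low, single_high = 5.0, 6.0  # one job on its own, fps (range from the post)
parallel = aggregate_fps(2.8, 4)    # four jobs at ~2.8 fps each

print(f"4 parallel jobs: {parallel:.1f} fps total")
print(f"1 job alone:     {single_low:.0f}-{single_high:.0f} fps")
print(f"gain vs one job: {parallel / single_high:.2f}x to {parallel / single_low:.2f}x")
```

In other words, running four jobs roughly doubles total throughput even though each individual job runs at about half the speed.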

I take your point, but I have seen TVAI use 100% of my 4090 in this exact same physical setup, with coil whine while doing so. Something in the software changed (Topaz, Windows 11, Nvidia drivers, whatever), and now it’s slower, shows less utilization, and there’s no coil whine.

I was briefly able to set maximum performance in the Nvidia Control Panel and get 100% utilization in some Topaz workloads. Now, whatever I do, I cannot recreate it, but I do have timings and screenshots from when it was using 100%. So I’ve seen Bigfoot and he exists: the 4090 can be fully utilized, reaching 0.06-0.09 sec/frame and absolutely screaming with coil whine while doing so.

There are also some jobs where it is now quicker to run just the frame-rate conversion first and the enhancement afterwards: each pass takes 0.21 sec/frame, compared to 0.81 sec/frame when both are done together. Before, this job did both operations at the same time in less combined time while using 100% of the 4090. It’s 100% a software issue somewhere along the chain.
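To put the sec/frame figures above side by side, here is a minimal Python sketch. It assumes both per-pass figures apply to the same number of frames, which is a simplification: if the frame-rate conversion doubles the frame count, the enhancement pass has twice as many frames to process. `fps` is a hypothetical helper, and the timings are the ones quoted in the post.

```python
def fps(sec_per_frame: float) -> float:
    """Convert a sec/frame figure into frames per second."""
    return 1.0 / sec_per_frame


# Timings quoted in the post, in sec/frame.
interp_pass = 0.21   # frame-rate conversion on its own
enhance_pass = 0.21  # enhancement on its own
combined = 0.81      # both operations in a single job

# Assumption: both per-pass figures apply to the same number of frames.
two_pass = interp_pass + enhance_pass

print(f"two separate passes: {two_pass:.2f} sec/frame ({fps(two_pass):.2f} fps)")
print(f"one combined job:    {combined:.2f} sec/frame ({fps(combined):.2f} fps)")
```

Even with that simplification, 0.42 vs 0.81 sec/frame shows why splitting the job into two passes came out ahead.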

I’ve also just upgraded from an RTX 3070 to an RTX 4090, primarily for performance gains in TVEAI, but I am seeing only slight improvements.

While the RTX 3070 with 6GB VRAM was utilized up to 100% with TVEAI, the RTX 4090 with 24GB VRAM basically idles at 25% TDP and 21% GPU load.

(all values reported by GPU-Z)

VRAM usage stays BELOW 6GB even though the card has 24GB…

Any ideas?

Aha, interesting! What is the best AMD GPU for a 500 W PSU? I’m checking out some Nvidia cards at the moment and found that the 1660 Super / Ti will work well on 500 W.

As far as I know, Topaz is based on OpenVINO.

docs.openvino.ai/latest/index.html

It was developed by Intel.

The benefit is low-resource, multi-platform support for a wide range of systems.

But the downside is very weak performance on powerful systems such as high-end graphics cards.

OpenVINO performs better on AMD cards than on NVIDIA. That’s not on purpose; it could be connected to AMD’s much better 16-bit floating-point (FP16) performance.

But in general, Intel does not optimise for its competitors, and that’s why OpenVINO works great on Intel GPUs and very poorly on any others.

Hand-optimising for RTX or RX means double work.

It seems like a conflict of interest. Professionals want to run Topaz on a 4090 at max speed.

But Topaz wants to sell its tools to the widest possible audience, investing in a platform that runs even on an Intel laptop GPU or CPU.

I would wish for a Vulkan version of Topaz: it has quite wide support and performs much better on high-end GPUs.

Video AI v3.0.11, on first inspection, has given me back the good 4090 performance.

Coil whine, 70-100% 4090 utilization, and 0.12 spf on the Black Desert Online Wheel 2x Gaia CG test. I also have a lot going on in the background, so I’m sure my “PB” numbers from before will be matched under the right conditions.

How is the performance improvement compared to the RTX 3090?

Update: all of this is outdated!!!

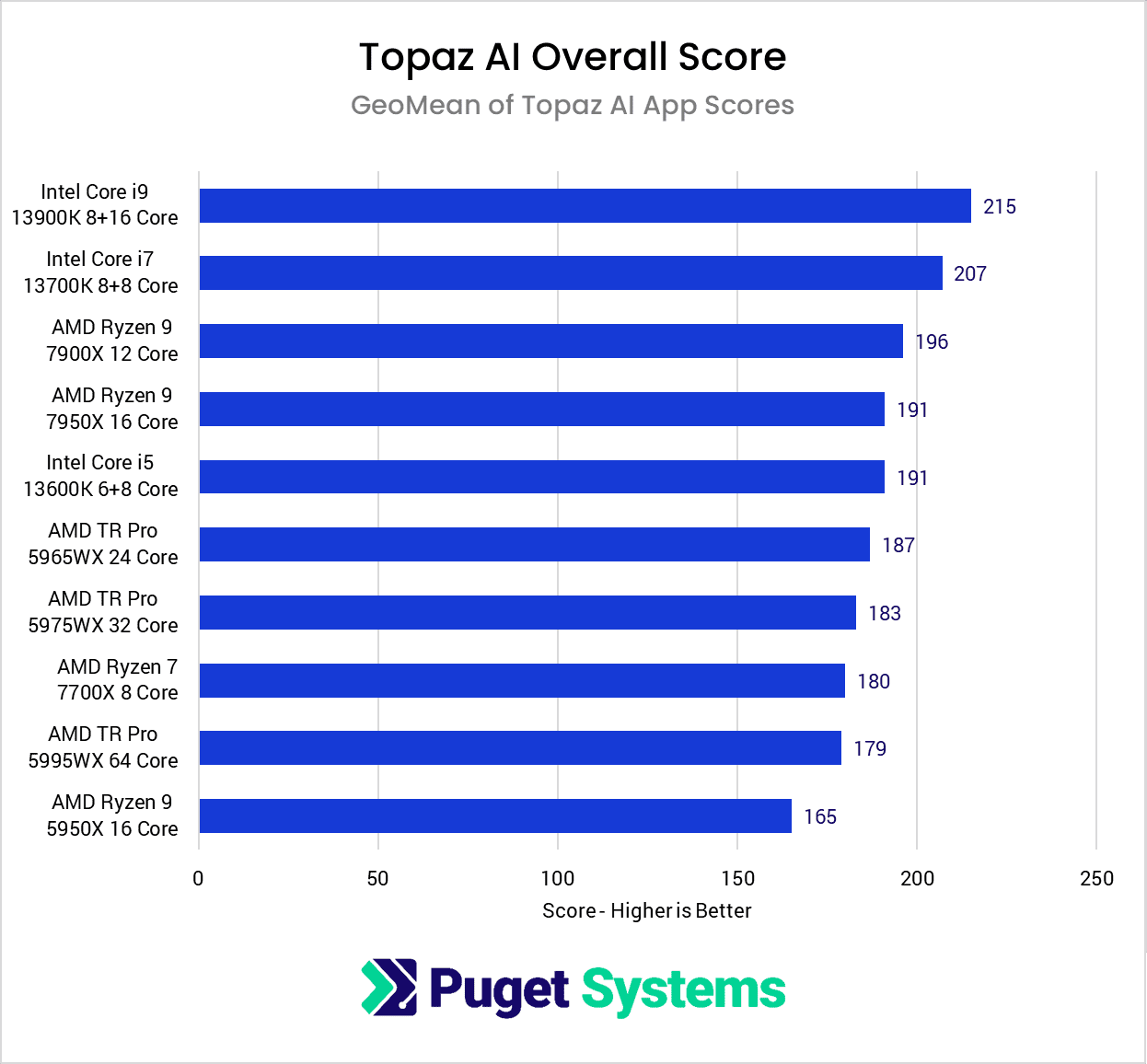

“Moving on to the GPU-based results, we did all our testing with the Core i9 13900K as that was the fastest CPU for every Topaz AI application.”

I hope we get some sort of timeline from Topaz Labs for when they will implement a fix for GPU utilization. Maybe I am not understanding correctly, but every measure of utilization I check shows my 4090 nowhere close to fully utilized. I have my settings set to render with the 4090, yet during my exports my AMD 7950X is getting slammed. Not sure what’s going on here; it feels like my settings are not taking effect. It would be nice to get some more advanced settings to better utilize my system and speed up these slow exports.

Yesterday I tested the latest beta, whose main feature is performance improvements, and my 4090 was almost fully used, giving me 0.08 spf upscaling a 1080p video to 2160p.

Very interesting. Which build (version) is it? Since I have no beta access, I’m using the latest 3.0.12, and my performance/utilization is really not good.

Only 10 fps when upscaling from 480p to 720p with Proteus “Auto” and NVENC10. Also, the benefit of 4 parallel streams is only moderate, because the frame rate gets divided: each stream only encodes at 2.5-3 fps.

There must also be something like driver overhead. At the moment only a single stream is running, with 15% CPU for ffmpeg and 30-35% for “System”. WTF!!!

My Rig: Ryzen 9 3950X, 32GB RAM 3600MHzCL16, RTX 4090, Unlocked NVENC