Another observation: rendering to 1080p shows almost no GPU utilization (4-8%), and performance is similar going from 480p to 1080p and from 480p to 4K.

Only a few encoding options can actually use the hardware-accelerated encoders. In reality, though, it is typically the models being used and their parameters that limit the export speed. I’ve been testing this exhaustively, both in ffmpeg directly and through Video AI, and have found very few instances where using the hardware encoders makes a measurable difference. When I’m applying sharpening or denoise filters, they take longer to process than my computers take to encode each frame, regardless of whether a hardware or software encoder is used.

- H264 CBR is Software Encoding

- H264 VBR 1-Pass is Hardware Encoding

- H264 VBR 2-Pass is Software Encoding

- H265 CBR is Software Encoding

- H265 VBR 1-Pass is Hardware Encoding

I reran the test on my machines and am updating the results:

2:15 1080p file to 4K, Proteus, Auto. 4068 total frames.

Adding in performance per core:

M1 8 core- 33:32, 2.02 frames per second (0.25fps per core)

M1 Pro 14 core - 18:01, 3.76 frames per second (0.27fps per core)

M2 Pro 19 core - 13:38, 4.97 frames per second (0.26fps per core)

I was under the impression that the M2 had a more powerful Neural Engine, but it doesn’t appear that way yet: the two Pros are basically even after normalizing for core count, unless I’m missing something.
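For anyone who wants to add their own machine to the list, here is a minimal Python sketch of the arithmetic behind the figures above. The function names are made up for illustration, and the core counts are simply the ones quoted in the results.

```python
def parse_mmss(text):
    """Convert an 'MM:SS' string to a number of seconds."""
    minutes, seconds = text.split(":")
    return int(minutes) * 60 + int(seconds)


def summarize(frames, cores, elapsed):
    """Return (frames per second, frames per second per core)."""
    fps = frames / parse_mmss(elapsed)
    return fps, fps / cores


TOTAL_FRAMES = 4068  # frame count of the test clip quoted above

# (label, core count, export time) exactly as posted above
RESULTS = [
    ("M1", 8, "33:32"),
    ("M1 Pro", 14, "18:01"),
    ("M2 Pro", 19, "13:38"),
]

for label, cores, elapsed in RESULTS:
    fps, per_core = summarize(TOTAL_FRAMES, cores, elapsed)
    print(f"{label:7s} {fps:.2f} fps  {per_core:.2f} fps per core")
```

To compare another machine, append a tuple with its core count and export time to `RESULTS`.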

You can monitor how much the CPU, GPU and ANE are being utilized with the Python tool asitop.

Basically just open a terminal and install using

pip3 install asitop

if pip3 isn’t linked then just use

pip install asitop

and then run using

sudo asitop

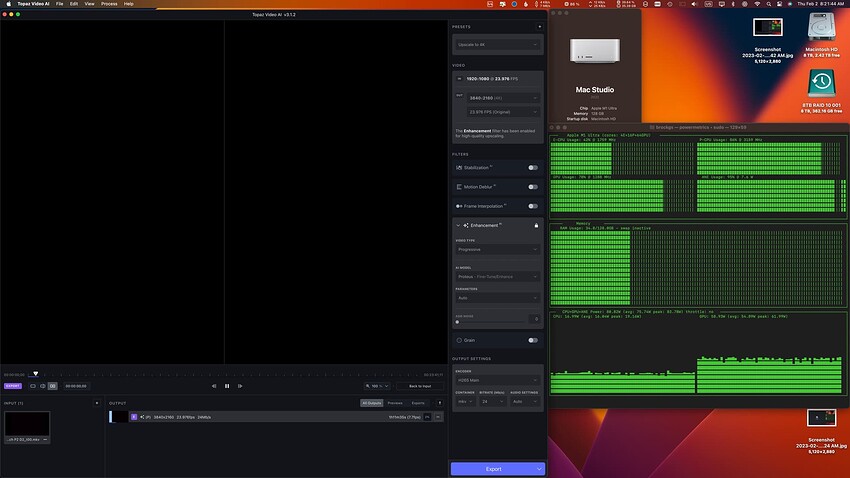

Here, for example, you can see that when I process a video from FHD to 4K using Video AI 3.1.2, the GPU and ANE are almost fully utilized, with the CPU still contributing quite a bit. Different models and encoding options will change the utilization, but this is the simplest way to monitor it. Below are two screenshots of my test right now.

Before I start exporting the video:

While I am exporting the video:

Thanks for sharing. If I may, how many GPU cores are on your MAX and Ultra?

- M1 Max 64GB, 8 performance CPU cores, 2 efficiency CPU cores, 32 GPU cores, 16 core Neural Engine

- M1 Ultra 128GB, 16 performance CPU cores, 4 efficiency CPU cores, 64 GPU cores, 32 core Neural Engine

Interesting.

So, on a performance per GPU core basis (fps per core):

M1 - 0.25

M1 MAX - 0.14

M1 Ultra - 0.10

So there are some diminishing returns, or non-linear scaling. The Ultra is 2x the Max but only 50% faster. Is it taking full advantage of the Ultra when you process?

The M1 Pro to Max is a gain of 19% with 110% more GPU cores.

The M2 Pro performed 7% better than the M1 Max with 40% fewer graphics cores.

I’m interested to see if anyone has an M2 Max to bench and compare. It seems at some point GPU cores matter less (I’m guessing the Ultra’s extra Neural Engine cores are doing the heavy lifting).
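To put a number on the non-linear scaling, here is a rough Python sketch that turns the rounded fps-per-GPU-core figures from this thread into a "fraction of linear scaling" relative to the base M1. The helper name is hypothetical, and because the inputs are rounded, the output is only approximate.

```python
# fps per GPU core as quoted in this thread (rounded values)
PER_CORE_FPS = {"M1": 0.25, "M1 Max": 0.14, "M1 Ultra": 0.10}
# GPU core counts as quoted in this thread
GPU_CORES = {"M1": 8, "M1 Max": 32, "M1 Ultra": 64}


def scaling_efficiency(chip, baseline="M1"):
    """Fraction of ideal linear speedup achieved relative to the baseline chip."""
    fps = PER_CORE_FPS[chip] * GPU_CORES[chip]
    base_fps = PER_CORE_FPS[baseline] * GPU_CORES[baseline]
    speedup = fps / base_fps
    core_ratio = GPU_CORES[chip] / GPU_CORES[baseline]
    return speedup / core_ratio


for chip in GPU_CORES:
    print(f"{chip:9s} {scaling_efficiency(chip):.0%} of linear scaling")
```

A value of 100% would mean throughput grows in step with GPU core count; anything lower means the extra cores are not being fully converted into frames per second for this particular job.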

Also, I couldn’t get asitop to work. It seemed to install, but when I run sudo asitop I get “command not found”.

Thanks for sharing, this has been an enlightening experiment. There aren’t a lot of people benchmarking this software on Mac that I’ve been able to see.

@stephen.grubb you’re absolutely right when it comes to FHD to 4K scaling. HOWEVER, each type of job is different and shows different performance per watt and/or performance per CPU, GPU, or Neural Engine core. For example, when I’ve been doing DeNoise and Sharpen on low-quality FHD video, I get almost perfect 1:1 scaling of performance with GPU core count from M1 to M1 Max to M1 Ultra. Since the pipeline for most jobs in Topaz Video AI touches the CPU, GPU, and Neural Engine to different degrees, the performance analysis on the machines will vary quite a bit depending on the job.

Quite fascinating to look at, really. If I had more time I would do a real deep dive and run as many permutations of jobs as I could across each of my machines to explore this. But…kids…work…life. Maybe someday I can do this.

Interesting. This was one of the reasons I was considering holding off for a M2 Pro machine, but I settled for a 16" M1 Pro in the end as my old laptop would never be able to run VEAI. How do you measure Neural Engine performance?

asitop is the only solution that I know of. It was a pain for me to get running. Most of the instructions given (including in this thread) didn’t work when it came time to run it. I’m sure I did something wrong, not linking it maybe, but ultimately, to get it going, I held Option in Finder, clicked Go in the menu bar, selected Library, and searched for asitop (after following the install instructions above from Brock).

the (cpu/gpu upgraded) M2 Pro runs nice! noticeable step up from my base M1 Pro 14" (the binned 8c/14c), as would be expected.

yeah only just noticed the recommendation for Asitop. I installed and ran the command but terminal is asking for a password?

Your system password.

If it says command not found, click on finder, hold option and click the go menu, and head to library. Search for asitop in there.

Just enter your system password. ‘sudo’ before a command just tells the computer you want to run it as the root user, which gives it the special privileges it needs to access all the data regarding CPU, GPU, RAM and Neural Engine statistics.

Also, if it does say that command is not found, all you need to do is close the terminal window and re-open it. Sometimes when you install a command line application like asitop or others, that terminal “session” won’t actually know the path to where it is until you close out of that session and open a new terminal window where it will see the new path to the new program.
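If reopening the terminal doesn’t help, the asitop script may have landed in a directory that isn’t on the PATH. Below is a hedged Python sketch that looks in the two places pip commonly puts command line scripts and prints the full path if it finds one. The function names are made up for illustration, and it only checks the Python you run it with, so run it with the same python3 that pip3 belongs to.

```python
import os
import shutil
import site
import sysconfig


def candidate_script_dirs():
    """Directories where pip commonly places command line entry points."""
    return [
        sysconfig.get_path("scripts"),            # system-wide or virtualenv install
        os.path.join(site.getuserbase(), "bin"),  # per-user (`pip install --user`) install
    ]


def find_command(name="asitop"):
    """Return the full path to `name`, or None if it cannot be found."""
    on_path = shutil.which(name)
    if on_path:
        return on_path
    for directory in candidate_script_dirs():
        candidate = os.path.join(directory, name)
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    return None


if __name__ == "__main__":
    path = find_command()
    if path:
        print(f"Found asitop at {path}; try: sudo {path}")
    else:
        print("asitop not found; it may be installed for a different Python.")
```

Running the command by its full path sidesteps the PATH question entirely, which is essentially what hunting for it in the Library folder achieves by hand.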

something still not working. I get this after entering my password

sudo: asitop: command not found

Argh, I opened up a real can of worms here!

I went back to my machine, created a virtual machine with macOS 13.2, tried to install asitop, and flailed around for a while, ultimately failing. This could be because asitop won’t run in a virtual machine (which would make sense, because it’s trying to access very low-level data that might not really be there in the VM). The trouble is that I’ve installed so many other third-party packages, and use a version of Python that doesn’t come with macOS by default, that it’s hard for me to replicate the install process from start to finish the way you are likely seeing it.

I’ll scratch my head some more and see if I can figure out a simple, guaranteed process to install and get working properly.

Hi! Thank you for your post.

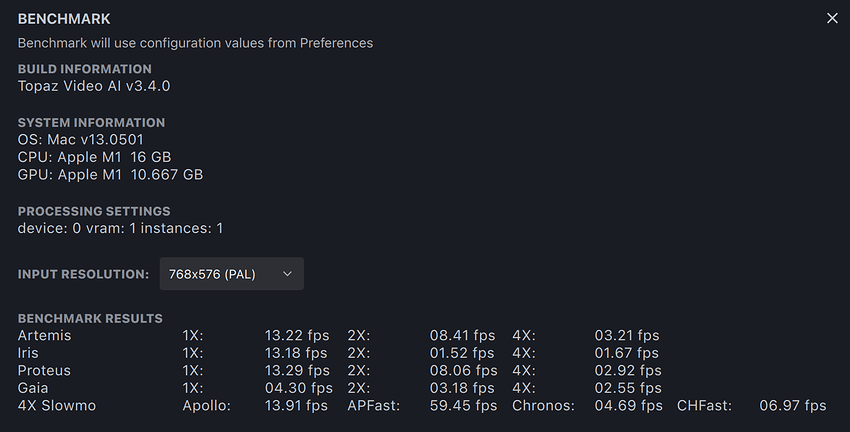

Can you post benchmarks using Topaz? I think it’s Command + B.

I have a Mac mini with an M1 and 16GB RAM. I ran a job on a 30-minute deinterlaced PAL video, 704x576p ProRes at ~61Mb/s, upscaling to 1440x1080p with square pixels. Proteus, with Relative to Auto and Sharpen at 20. H264 with a target of 8Mb/s (I also tried Auto, with similar load results).

FPS was 7-8 with some 9-10 bursts here and there for the ~1hr40m. ffmpeg CPU load was ~200-300% and GPU was 25-40% and RAM 600-1200MB.
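As a sanity check on those numbers, here is a small Python sketch that estimates export time from clip length and processing speed. It assumes the deinterlaced PAL source is 25 frames per second (a 50p deinterlace would double the frame count), and the function name is made up for illustration.

```python
PAL_FPS = 25  # assumption: deinterlaced to 25p, not 50p


def estimated_export_minutes(duration_min, source_fps, processing_fps):
    """Estimate export time in minutes for a clip processed at a steady rate."""
    total_frames = duration_min * 60 * source_fps
    return total_frames / processing_fps / 60


for rate in (7, 7.5, 8):
    minutes = estimated_export_minutes(30, PAL_FPS, rate)
    print(f"{rate} fps -> about {minutes:.0f} minutes")
```

At 7 to 8 fps this lands between roughly 94 and 107 minutes, which lines up with the ~1hr40m reported above.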

- What does the more than 100% CPU load mean? More than one core maxed out?

- Why did GPU cap at about 40%?

- Does ffmpeg (Topaz Video AI) use multiple CPU cores for a single job?

- With this being said, what is the bottleneck in my system?

- How much improvement will an M2 Pro have over my system?

When upscaling SD sources on Apple M1 / M2, restricting Max Memory Usage to 10% (in TVAI Settings) gives significantly higher FPS and GPU usage around 95%.

Perhaps Topaz will eventually optimize the settings and performance on Apple Silicon for SD sources. Unless it’s Apple hindering them? It would be interesting to know.

Thanks.

Andy

Changing the memory setting does nothing, with either 1 or 2 processes. My FPS with that particular setting caps at about 8, or 4 and 4 if 2 processes are running. Lowering the memory actually lowered my FPS to about 7. CPU and GPU load did not go up. macOS Ventura 13.5.1, TVAI 3.4.1.

I ordered a Mac Studio as I was due for an upgrade anyway. 16GB RAM is not enough on my mini (I live overseas, so it will take a while to come from the US), and I’ll test with the base M2 Max.

I hope they are already optimized or optimize for SD sources. I see no reason to upscale HD content when my $$$$'s TV can do that just fine. I am happy with 1080p sources on the TV, I just need to get a bunch of content to that level.

Don’t hold your breath too much.

TVAI doesn’t really scale well on Apple Silicon.

There are generally strange, “uneven” things performance-wise, and the speedup from an M1 Pro with 16 GPU cores to my M2 Ultra with 60 cores is also less than expected: more like a 2.4x gain for 3.75x the GPU cores, not even counting the (only slightly) higher speed of M2 vs. M1.