Best update ever. Thank you very much!

Be sure to also check out the Product Update Post for this release which has a bunch of helpful tutorial videos and other clips that showcase all of the powerful new features in Video Enhance AI!

When will this version be listed in downloads on your website?

It’s been updated now, thanks for the heads up

How does the Chronos AI model compare to RIFE neural network frame interpolation for increasing framerate? I’ve been using RIFE via the Flowframes app.

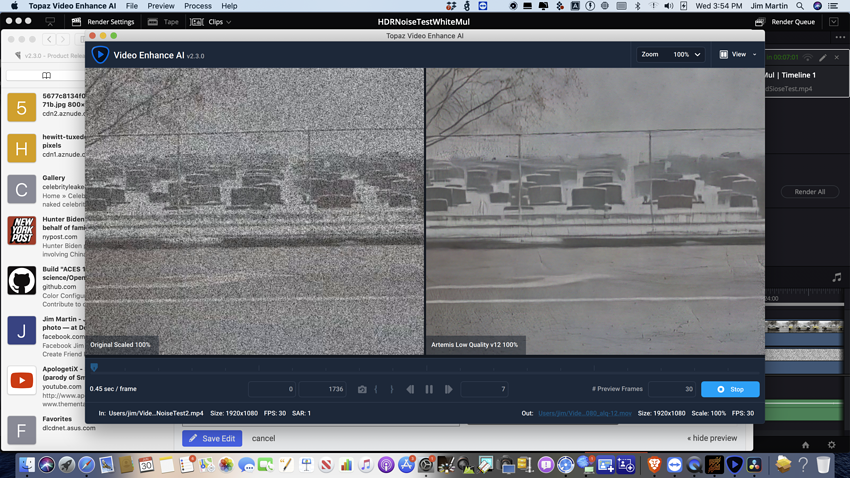

Just uploaded files for the noise section.

In general, RIFE is faster at 2x/4x, while Chronos produces better quality and is flexible for any scale up to 20x. But the results for both depend on the input video.
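For anyone wondering what a scale factor means in terms of frames, here is a minimal arithmetic sketch. It says nothing about how Chronos or RIFE work internally; the function name `interpolated_positions` is hypothetical and only shows where new frames would sit between two source frames for a given integer factor.

```python
from fractions import Fraction


def interpolated_positions(factor):
    """Return the relative positions (between 0 and 1, exclusive) of the
    new frames that sit between two consecutive source frames when the
    framerate is multiplied by an integer `factor`.

    Hypothetical helper for illustration only.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    # A factor of N needs N - 1 new frames in every gap, evenly spaced.
    return [Fraction(i, factor) for i in range(1, factor)]


# 2x needs one new frame halfway between each pair of source frames.
halfway = interpolated_positions(2)
# 4x needs three new frames at 1/4, 1/2 and 3/4 of the gap.
quarters = interpolated_positions(4)
# 20x needs nineteen new frames in every gap.
twentieths = interpolated_positions(20)
```

So going from 2x to 20x means generating 19 new frames per gap instead of 1, which is part of why the input video matters so much at high factors.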

It doesn’t look like you’ve run the preview.

That’s not noise, that is half the pixels of an image. lol

It looks more like an artistic style than digital noise. There is no chroma noise, only luma noise, and the grain is the size of a fist. That does not look like a typical noise pattern from a digital camera, so I imagine it is not treated as one. If you want to fully get rid of the “noise” you would either have to reconstruct 50% of the image, or go the old-school way and just apply blur to the image. All I’m saying is that I would not use this as the test image for noise reduction.

That is literally the noisiest footage I’ve ever seen (you might as well be upscaling TV random white noise). You’re expecting too much, they’re not gonna train the AI for unrealistic noise levels like that.

The luma noise is static. The Low Quality video setting removed the noise nicely; the new model with adjustable settings could not.

Proteus looks very impressive in the video demo. I was not able to generate as much texture detail as in the tiger video, using the beta. Was there improvement to the model between the last beta and this release?

There was. We typically try to give the models as much time to train as possible, and will package them at the last minute before a release. So this version has about a week’s worth of extra training compared to the last beta version.

Did you get my samples?

Bishop, how about enabling the application to gather additional training data while it runs on the user’s end? That could save a lot of training time on the company’s end and continuously improve the training data for everyone while customers use it.

How would that work? As far as I know, to train an AI you need the high quality reference result along with the thing you want to reconstruct/improve. Normally users only have the latter of those. And even if users had both the original high quality source footage and the bad one, why would they want to waste their resources on training an AI? Isn’t this why we pay for this software - to have other people deal with this? Why as a paying customer would I have to increase my electricity bill to train the AI?
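To make the point about paired data concrete, here is a rough sketch of how a training pair is usually built: you start from the clean reference and degrade it yourself, so you always have both halves. This is only an illustration under my own assumptions, not how Topaz trains its models; `make_training_pair`, `mean_abs_error`, and the Gaussian noise are all hypothetical choices.

```python
import random


def make_training_pair(clean, noise_sigma=20.0, seed=0):
    """Build one (degraded, clean) pair from a clean grayscale frame.

    `clean` is a list of rows of 0-255 integers. The degradation here is
    plain Gaussian noise, chosen only for illustration.
    """
    rng = random.Random(seed)
    degraded = [
        [min(255, max(0, round(p + rng.gauss(0.0, noise_sigma)))) for p in row]
        for row in clean
    ]
    return degraded, clean


def mean_abs_error(a, b):
    """Average absolute pixel difference between two same-sized frames."""
    total = sum(abs(x - y) for ra, rb in zip(a, b) for x, y in zip(ra, rb))
    count = sum(len(row) for row in a)
    return total / count


# A flat gray 8x8 frame stands in for the high quality reference.
clean_frame = [[128] * 8 for _ in range(8)]
noisy_frame, target = make_training_pair(clean_frame)

# Training needs this error signal, and it can only be computed
# when the clean target is available.
error = mean_abs_error(noisy_frame, target)
```

A user who only has the noisy footage has nothing to compute that error against, which is the gap I mean.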

Not sure if this has been discussed before but I’ve noticed that Proteus doesn’t deinterlace SD videos. The tutorial makes it seem that Proteus can be used with SD video immediately but I don’t think so based on the interlacing.

Perhaps the next update can include deinterlacing in Proteus the way Dione does so nicely.

Dione is still my go-to model for SD videos, though I haven’t seen a noticeable improvement in this model for many months.

None of the models appear to be able to significantly improve Super 8 mm film or 1980s-1990s VHS transfers.

Thanks for the new version of Video Enhance.