Finally someone here who really has a reason to use AI, and a source that I really consider low quality.

Good job so far

I like that you take care to keep (or better: increase) the gradients and shadows on the cheeks and necks. Those are very important parts.

Getting detail out of blonde hair is harder than out of dark hair. On the other hand, if the dark hair is in shadow (like in your second sample image), it can get tricky. (You can try to trick the AI by changing contrast and brightness so that Video AI sees more. If it works, change both back to the old values afterwards.)
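Why the contrast/brightness trick can be undone: a minimal Python sketch, assuming a simple linear model (contrast around mid-grey 128, then a brightness offset, on 8-bit luma). The model, the function names and the sample values are my own illustration, not what Video AI or any particular AviSynth filter does internally. Stretching the shadow values spreads them over more levels for the AI to work with, and the exact inverse puts the tonality back, as long as nothing gets clipped at 0 or 255.

```python
def adjust(value: float, contrast: float, brightness: float) -> float:
    """Linear contrast around mid-grey (128), then a brightness offset."""
    return (value - 128.0) * contrast + 128.0 + brightness


def undo(value: float, contrast: float, brightness: float) -> float:
    """Exact algebraic inverse of adjust()."""
    return (value - 128.0 - brightness) / contrast + 128.0


def to_8bit(value: float) -> int:
    """Round and clamp to 0..255, like storing the result in an 8-bit clip."""
    return max(0, min(255, round(value)))


# Hypothetical luma values of dark hair in shadow: squeezed into a narrow range.
shadow_hair = [18, 20, 23, 27, 31, 35]
contrast, brightness = 1.5, 60.0

lifted = [to_8bit(adjust(v, contrast, brightness)) for v in shadow_hair]
restored = [to_8bit(undo(v, contrast, brightness)) for v in lifted]

print("original:", shadow_hair, "range", max(shadow_hair) - min(shadow_hair))
print("lifted:  ", lifted, "range", max(lifted) - min(lifted))
print("restored:", restored)
```

If the lifted values hit 0 or 255, the clipped detail is gone for good, so keep the adjustment moderate.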

I got some nice results with blonde hair from an extremely blocky source when I first used Proteus V3 at the original resolution in Video AI 2.6.4, and then processed that output again with Proteus V3 at 2x.

The first step was to deinterlace the complete file:

QTGMC(Preset="Slow", ShowSettings=false, TR2=3, EdiThreads=3,\
GrainRestore=0, SLMode=3, Sharpness=1, Border=True,\
NoisePreset="Medium", NoiseProcess=1, ChromaNoise=false,\
NoiseRestore=0.7, Sigma=1.5, NoiseDeint="Generate", StabilizeNoise=true,\
FPSDivisor=2, ShutterBlur=0, ShutterAngleSrc=0, ShutterAngleOut=0,\
Lossless=0, SourceMatch=0, MatchPreset="Medium",\
MatchPreset2="Medium", MatchEnhance=0.5)
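For reference, the frame-rate arithmetic behind `FPSDivisor=2`: QTGMC bobs the video, producing one frame per field (double rate, 50 fps for PAL), and `FPSDivisor=2` keeps every second of those frames, so the result is 25 fps progressive with the same frame count as the source. A small Python sketch; `qtgmc_output` is a hypothetical helper, and the round-up for other divisors assumes QTGMC keeps the first of every n frames.

```python
def qtgmc_output(source_frames: int, source_fps: float, fps_divisor: int) -> tuple[int, float]:
    """Frame count and rate after QTGMC for interlaced input (hypothetical helper)."""
    fields = source_frames * 2   # every interlaced frame carries two fields
    field_rate = source_fps * 2  # 25 fps PAL -> 50 fields per second
    # QTGMC outputs one frame per field; FPSDivisor=n keeps every n-th (rounded up).
    frames = (fields + fps_divisor - 1) // fps_divisor
    return frames, field_rate / fps_divisor


print(qtgmc_output(1000, 25.0, 1))  # double rate
print(qtgmc_output(1000, 25.0, 2))  # single rate, as in the script above
```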

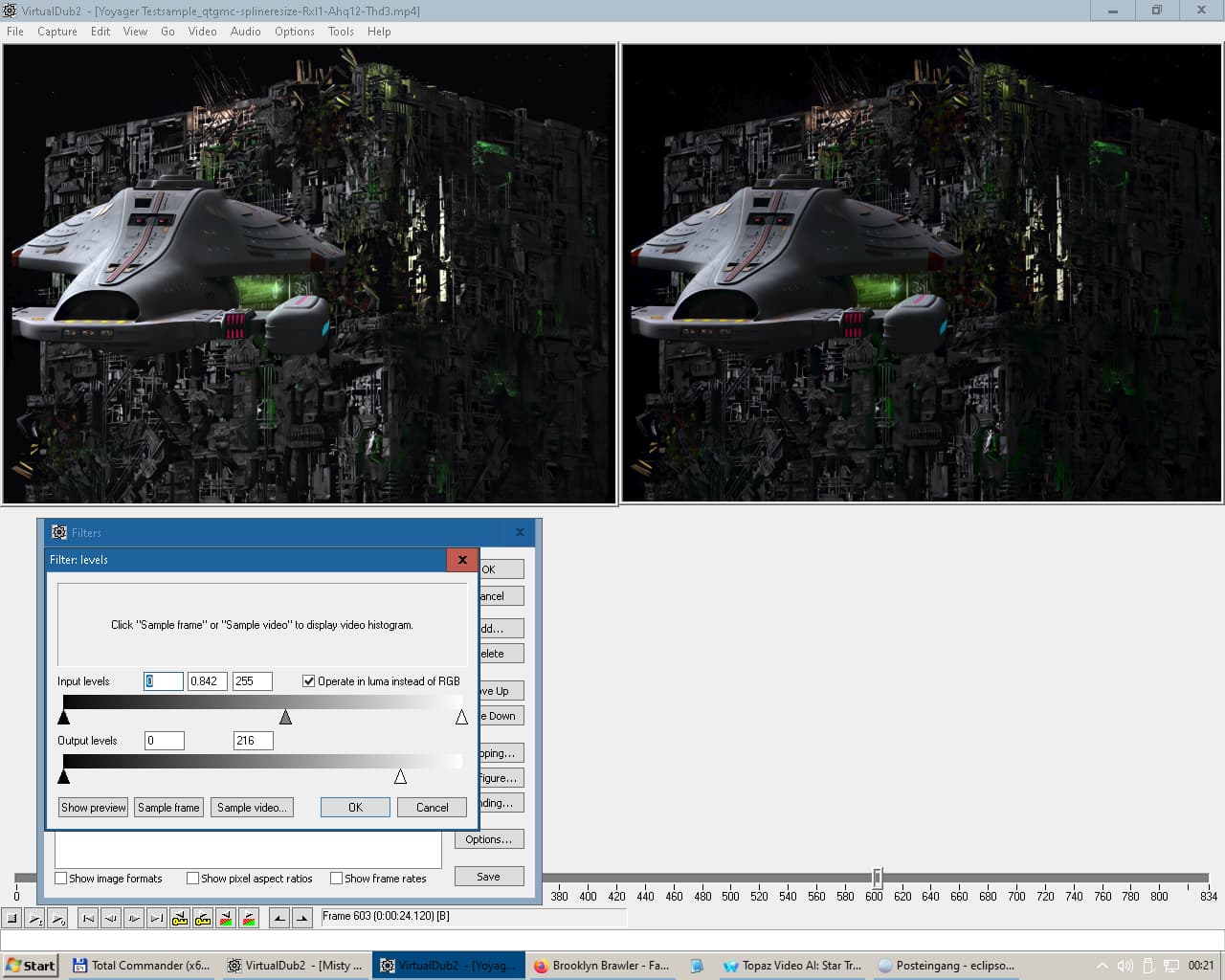

Then post-processing of the complete file. This was needed because in many places the blocking artefacts and mosquito noise are so extreme that Video AI alone is not enough:

Deblock(quant=24, aOffset=2, bOffset=0, planes="y")

MosquitoNR(strength=14, restore=128, radius=2, threads=0)

LimitedSharpenFaster(strength=60,wide=false,overshoot=1,undershoot=1,edgemode=1,ss_x=1.5,ss_y=1.5)

LimitedSharpenFaster(strength=40,wide=false,overshoot=1,undershoot=1,edgemode=2,ss_x=1.5,ss_y=1.5)

The board software converted all the PNG screenshots to JPG.

Screenshot directly from the DVD source (normal yadif deinterlacing, 25 fps, bottom field discarded). Horrible blocking artefacts: it was encoded very badly. Most B-frames look better than the P-frames! Normally it should be the other way around, but whoever encoded the DVD used very bad settings. The more you zoom in, the worse it gets.

704x576 Anamorphic

Step1 | 76-frame cut made with AviSynth+

MosquitoNR(strength=16, restore=0, radius=2, threads=0)

FFT3DFilter(sigma=0, plane=0, bt=3, bw=32, bh=32, ow=16, oh=16, sharpen=0.0, dehalo = 0, sigma2 = 0, sigma3 = 2, sigma4 = 2)

Step2 | Output from Step 1 to Video AI 2.6.4

Proteus v3 100%

100,20,0,30,10,0

Step3 | Output of Step2 back into Video AI 2.6.4

Proteus v3 200%

30,50,0,0,10,-10
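The two Proteus passes written out as data. Caution: the slider names and their order (Revert Compression, Recover Details, Sharpen, Reduce Noise, Dehalo, Anti-alias/Deblur) are my assumption about how the six numbers map onto the Proteus v3 manual settings; check them against the panel in your Video AI version. The sketch also tracks the frame size: 100% keeps 704x576, 200% doubles it.

```python
from typing import NamedTuple


class ProteusSettings(NamedTuple):
    # ASSUMED slider names and order; verify against your Video AI version.
    revert_compression: int
    recover_details: int
    sharpen: int
    reduce_noise: int
    dehalo: int
    anti_alias_deblur: int


def parse_settings(text: str) -> ProteusSettings:
    """Turn a '100,20,0,30,10,0' style string into named values."""
    values = [int(v) for v in text.split(",")]
    if len(values) != len(ProteusSettings._fields):
        raise ValueError(f"expected 6 values, got {len(values)}")
    return ProteusSettings(*values)


# (step, scale factor, settings) for the two Proteus v3 passes
passes = [
    ("Step2", 1, parse_settings("100,20,0,30,10,0")),  # 100%
    ("Step3", 2, parse_settings("30,50,0,0,10,-10")),  # 200%
]

width, height = 704, 576  # anamorphic PAL source
for step, scale, settings in passes:
    width, height = width * scale, height * scale
    print(f"{step}: {width}x{height} {settings}")
```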

Step4 | Output of Step3 to AviSynth+

FrameNuum = last.FrameCount # Why? See the note (*) at the bottom of the post

DeleteFrame(FrameNuum - 8) # Why? See the note (*) at the bottom of the post

LimitedSharpenFaster(strength=50,wide=true,overshoot=5,undershoot=1,edgemode=2,ss_x=1.5,ss_y=1.5)

f3kgrainPlus(luma=200, chroma=400, mode=1, temp=60, adapt=65, sigma=1.0)

Done! 2x anamorphic. Pure, extreme, horrible *hit to gold.
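"2x anamorphic" means the frame is stored at 1408x1152 but still has non-square pixels, so the player (or a final resize) has to stretch it to the display aspect ratio. The post doesn't say whether this DVD is flagged 4:3 or 16:9, so the sketch below computes both. The helper name is hypothetical, and it assumes the 704-pixel-wide PAL picture maps to the full display aspect ratio.

```python
from fractions import Fraction


def display_width(stored_height: int, display_aspect: Fraction) -> int:
    """Square-pixel width for a frame shown at the given display aspect ratio."""
    return int(stored_height * display_aspect)


stored = (704 * 2, 576 * 2)  # 2x output, still anamorphic
for dar in (Fraction(4, 3), Fraction(16, 9)):
    print(f"{stored[0]}x{stored[1]} at {dar} -> {display_width(stored[1], dar)}x{stored[1]}")
```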

Screenshot directly from the DVD source (normal yadif deinterlacing, 25 fps, bottom field discarded). A portion with "good source" quality xD

Zoom in and you will see the mess.

704x576 Anamorphic

Step1 | 970-frame AviSynth+ cut straight into Proteus v3 200% | In rare cases, scaling directly to 2x works better.

70,30,0,3,15,0

Step2 | Output of Video AI 2.6.4 to AviSynth+

MosquitoNR(strength=11, restore=128, radius=2, threads=0) # helps against hair shimmer

LimitedSharpenFaster(strength=60,wide=true,overshoot=1,undershoot=1,edgemode=2,ss_x=1,ss_y=2)

Done! 2x anamorphic, *hit to gold.

It's shocking how good Video AI with Proteus V3 can be (and how much detail can be extracted from a blocky source), especially in combination with AviSynth.

\* Reason for `DeleteFrame(FrameNuum - 8)`:

If 2.6.4 gets an input that was itself an output of 2.6.4, it duplicates one frame (8 frames before the last frame) and drops the last one.

So the cut must be 77 frames, made by duplicating the last frame (because 2.6.4 will drop the last one), and the dupe frame (8 frames before the last one) must be deleted afterwards.

I duplicated the last frame with AviSynth and my tool (which creates the cuts) before exporting the 76-frame cut. That's why only DeleteFrame is visible here.
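The frame bookkeeping is easier to follow with a small simulation. This is a minimal Python sketch that models the 2.6.4 behaviour exactly as described above (a model of the observed symptom, not Video AI's code); the function names are hypothetical. It shows why padding the cut to 77 frames and then deleting index `FrameNuum - 8` gives back the original 76 frames, while skipping the padding loses the real last frame.

```python
def reprocess_264(frames: list[int]) -> list[int]:
    """HYPOTHETICAL model of the 2.6.4 symptom: the frame 8 frames before
    the last one is duplicated and the last frame is dropped."""
    n = len(frames)
    dup = n - 9  # index of the frame 8 frames before the last (index n - 1)
    return frames[: dup + 1] + [frames[dup]] + frames[dup + 1 : n - 1]


def delete_frame(frames: list[int], index: int) -> list[int]:
    """Remove one frame by index, the way AviSynth's DeleteFrame does."""
    return frames[:index] + frames[index + 1 :]


original = list(range(76))  # the 76-frame cut, frames numbered 0..75

# With the workaround: pad with a copy of the last frame before Video AI.
padded = original + [original[-1]]  # 77 frames
processed = reprocess_264(padded)   # still 77 frames, dupe at index 69
frame_nuum = len(processed)         # FrameNuum = last.FrameCount
fixed = delete_frame(processed, frame_nuum - 8)

# Without padding, the real last frame (75) is the one that gets dropped.
unpadded = delete_frame(reprocess_264(original), len(original) - 8)

print(fixed == original)  # True
print(75 in unpadded)     # False
```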

Psst: Video AI messed up one eye of the guy in your samples. It's the same problem with Photo AI and Gigapixel, and it's the same most of the time: one eye is good, the other gets distorted (the eyelids change angle). Improving the image first without upscaling can help, because of contour repositioning. Once it's better, upscaling in 2x steps will usually do a better job of keeping the contours in the right position.
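The "improve first, then upscale in 2x steps" advice as a tiny planner: one 1x cleanup pass, then as many 2x passes as needed to reach the target factor. This is a hypothetical helper that only encodes the rule of thumb from this post; it does not call Video AI, and it accepts only power-of-two targets because every upscaling pass is 2x.

```python
def plan_passes(target_factor: int) -> list[int]:
    """One 1x cleanup pass, then 2x passes until the target factor is reached."""
    if target_factor < 1 or target_factor & (target_factor - 1):
        raise ValueError("target_factor must be a power of two (1, 2, 4, ...)")
    passes = [1]  # improve first, without upscaling
    factor = 1
    while factor < target_factor:
        passes.append(2)
        factor *= 2
    return passes


def frame_sizes(width: int, height: int, passes: list[int]) -> list[tuple[int, int]]:
    """Frame size after each pass."""
    sizes = []
    for scale in passes:
        width, height = width * scale, height * scale
        sizes.append((width, height))
    return sizes


plan = plan_passes(4)
print(plan)                         # [1, 2, 2]
print(frame_sizes(704, 576, plan))  # [(704, 576), (1408, 1152), (2816, 2304)]
```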