I suggest an AI video product that converts 2D video to 3D Half Side-by-Side (HSBS) using the MiDaS 3 large model (MIT license).

It would convert 2D plus depth map to stereoscopic HSBS 3D.

Input: 2D video. Output: HSBS 3D video or anaglyph 3D video.

How exactly did you do that? Did you convert every picture?

Greetings, Stefan

Yes. I extracted the frame sequence, ran MiDaS 3 in Python to create a grayscale depth map frame sequence, and created a depth map video from it.

With ffmpeg I combined the 2D video with the depth map video, then used TriDef media player to decode it into a left/right stereo half side-by-side video in real time, and recorded that with a 1080p HDMI hardware capture device.

It takes me about 2 to 3 days to convert a 90-minute video from 2D to 3D using CUDA MiDaS 3 with Python.
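For anyone who wants to try the ffmpeg steps above, here is a minimal sketch of the two commands, built as argument lists in Python. The function names, file names, and the frame numbering pattern are my own assumptions, and I am also assuming the player expects the 2D image on the left and the depth map on the right; check what your player actually wants.

```python
from pathlib import Path
from typing import List


def extract_frames_cmd(video: str, frames_dir: str) -> List[str]:
    """ffmpeg command that dumps every frame of `video` as a numbered PNG."""
    pattern = str(Path(frames_dir) / "%06d.png")  # hypothetical naming scheme
    return ["ffmpeg", "-i", video, pattern]


def combine_cmd(video: str, depth_video: str, output: str) -> List[str]:
    """ffmpeg command that places the 2D video and the depth video side by side."""
    return [
        "ffmpeg",
        "-i", video,
        "-i", depth_video,
        # hstack needs both inputs to have the same height and pixel format.
        "-filter_complex", "[0:v][1:v]hstack=inputs=2[v]",
        "-map", "[v]",
        "-map", "0:a?",  # keep the audio of the 2D video if it has any
        output,
    ]
```

Each list can be passed to `subprocess.run(cmd, check=True)`. The depth map frames themselves would come from running MiDaS on the extracted PNGs between the two steps.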

But ideally, I imagine it would be possible to create the depth map for a frame, convert it to the right stereo view, then step to the next frame, so that it progressively builds the final half side-by-side video. Decoding the depth map can be done.

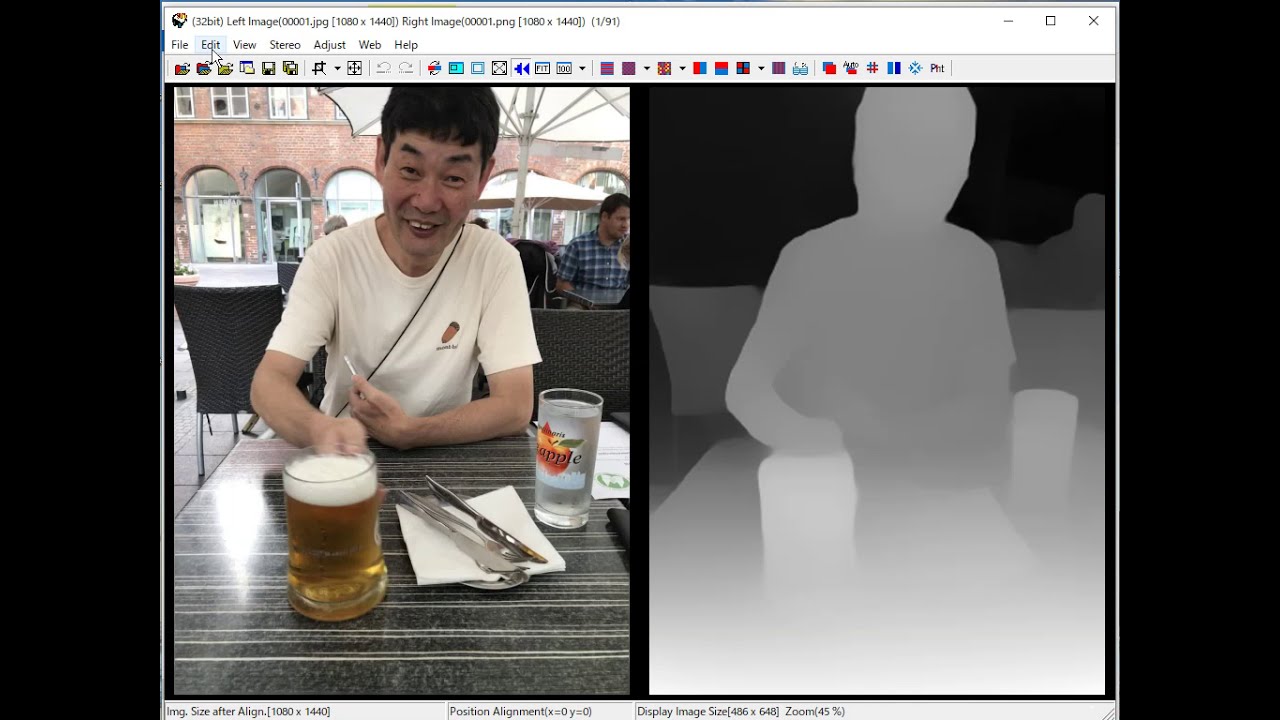

This is shown by StereoPhoto Maker, a free program by Masuji Suto:

https://stereo.jpn.org/eng/stphmkr/

2d to 3d Demonstration Stereo Photo Maker

Stereoscopic Player can also decode 2D plus depth map into a left/right stereoscopic view.
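To make the "2D plus depth map to left/right view" step concrete, here is a rough NumPy sketch of the general idea: shift each pixel horizontally by an amount that depends on its depth, fill the gaps, then squeeze both views to half width. This is not how TriDef, StereoPhoto Maker, or Stereoscopic Player do it internally; the function names, the `max_shift` parameter, the "brighter means nearer" convention, and the very naive hole filling are all assumptions for illustration.

```python
import numpy as np


def right_view_from_depth(image, depth, max_shift=16):
    """Synthesize a right-eye view from a 2D frame and its depth map.

    image: (H, W, 3) uint8 frame, used as the left-eye view.
    depth: (H, W) uint8 depth map, assumed brighter = nearer.
    max_shift: disparity in pixels for the nearest pixels (assumed tuning knob).
    """
    h, w = depth.shape
    disparity = np.rint(depth.astype(np.float32) / 255.0 * max_shift).astype(np.int64)
    right = np.zeros_like(image)
    filled = np.zeros((h, w), dtype=bool)

    # Paint far pixels first so that nearer pixels overwrite them.
    for d in range(max_shift + 1):
        ys, xs = np.nonzero(disparity == d)
        tx = xs - d  # nearer pixels move further left in the right-eye view
        keep = tx >= 0
        right[ys[keep], tx[keep]] = image[ys[keep], xs[keep]]
        filled[ys[keep], tx[keep]] = True

    # Naive hole filling: copy the nearest filled pixel to the right,
    # falling back to the nearest one on the left at the image border.
    cols = np.arange(w)
    nearest_right = np.minimum.accumulate(
        np.where(filled, cols, w)[:, ::-1], axis=1)[:, ::-1]
    nearest_left = np.maximum.accumulate(np.where(filled, cols, -1), axis=1)
    src = np.clip(np.where(nearest_right < w, nearest_right, nearest_left), 0, w - 1)
    return right[np.arange(h)[:, None], src]


def half_side_by_side(left, right):
    """Pack two views into one HSBS frame by keeping every second column."""
    return np.hstack([left[:, ::2], right[:, ::2]])
```

Calling `half_side_by_side(frame, right_view_from_depth(frame, depth))` for each frame would build the HSBS video progressively, one frame at a time. A real converter would resample with proper filtering and use smarter inpainting for the gaps behind foreground objects.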

Yes, this is a great request. Please, Video Enhance AI, consider making a product that has these features. Many of us would love to convert our 2D videos to 3D. The current 2D-to-3D converters on the market don't look good.

This would be a great addition to VEAI.

I have experimented with this too, also using MiDaS and some other tools, just as the OP demonstrated. The results for stills can sometimes be good in VR goggles, but the approach is not very robust for video, where we generate the stereo images frame by frame.

The problem is temporal coherence in the depth maps from frame to frame. Treating each frame individually generally results in a lot of flicker in the depth map (this is clear if you play the depth map images back as a video). The transformed stereo perspective then flickers, following the luminance flicker in the depth map. For instance, I tried to apply this to video from a camera doing a dolly move near several skyscrapers. I thought it would be a very immersive shot, but the depth map flicker made the buildings look like they were vibrating and wobbling all over the place. It gave me nausea very quickly, and I usually have no problem spending hours in VR.

There are probably some clever tricks to average across many frames of depth map information, maybe using multiple passes similar to what VAI may be doing with some of the stabilization modes.
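As a very simple illustration of averaging across frames, here is a sketch of an exponential moving average over the depth map sequence. This is only the most basic form of temporal smoothing, not what Topaz does; the function name and the `alpha` parameter are assumptions of mine.

```python
import numpy as np


def smooth_depth_sequence(depth_frames, alpha=0.2):
    """Exponential moving average over a sequence of depth maps.

    alpha near 0 smooths heavily (less flicker, more lag);
    alpha = 1 returns the input unchanged.
    """
    smoothed = []
    state = None
    for depth in depth_frames:
        depth = np.asarray(depth, dtype=np.float32)
        # The first frame initializes the running average.
        state = depth if state is None else alpha * depth + (1.0 - alpha) * state
        smoothed.append(state.copy())
    return smoothed
```

The trade-off is that depth now lags behind real motion, and the running average should be reset at scene cuts, otherwise depth from the previous shot bleeds into the next one. Something motion-aware would be needed for a dolly shot like the one I described.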

As much as I would like to see this happen, I know it is a very limited niche, and it is doubtful Topaz would be able to justify the work… Broader 3D VR adoption has always been “any day now” for decades, but despite dropping costs and improving tech, very few people own 3D equipment and even fewer have a persistent interest in it. It may not be a good business case.