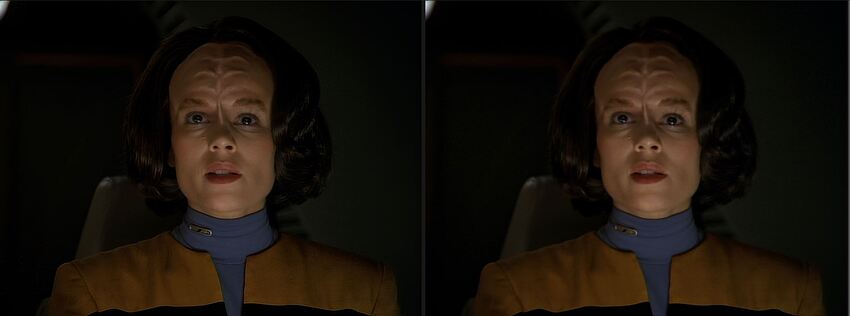

Hmm, I seem to be seeing other artifacts than you.

The left picture has great texture but horrible edges. The right has poor (blurry) texture but great, natural-looking edges.

Even the background in the left (sharpened) image seems to have gotten rid of the “pixelated web of dark gray-to-black patches” that is so common in almost-black backgrounds after analog → 8-bit AVC1 quantization. It looks smooth where it should and sharp where it should (except for the edges).

The over-sharpened edge problem in the left picture seems identical to the Gigapixel flaw that renders the “standard” profile useless. It not only has severe edge ringing and color bleeding, but it also surfaces very noticeable “edge shadows” (like echoes of edges) as far away as 5-10 pixels from edges. It’s particularly noticeable at the center of the actress’ neck, just above the collar.

But aside from the edges, I think the rest of the picture looks very good and natural.

It’s strange that Topaz hasn’t found a general solution to this problem, since it plagues every model of that generation (Gigapixel and video). What I do for Gigapixel is render one low-res and one high-res image, then use edge detection to create an edge mask, expand the mask by a few pixels, alpha-soften the mask’s edges, and blend the low-res image onto the high-res one using that mask.
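For anyone who wants to try the mask trick outside an image editor, here is a rough Python sketch of those steps. It is not anything Topaz does internally. It assumes both renders have already been resampled to the same size and converted to grayscale lists of floats in 0..1. The function names, the simple gradient-threshold edge detector, the choice to detect edges on the low-res render, and the default `threshold`, `grow`, and `soften` values are all made up for illustration.

```python
def edge_mask(img, threshold):
    """Mark pixels whose central-difference gradient magnitude exceeds threshold."""
    h, w = len(img), len(img[0])
    mask = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            gx = img[y][min(x + 1, w - 1)] - img[y][max(x - 1, 0)]
            gy = img[min(y + 1, h - 1)][x] - img[max(y - 1, 0)][x]
            if (gx * gx + gy * gy) ** 0.5 > threshold:
                mask[y][x] = 1.0
    return mask


def _window(mask, radius, reduce):
    """Apply `reduce` to the square neighbourhood around every pixel."""
    h, w = len(mask), len(mask[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            values = [
                mask[j][i]
                for j in range(max(0, y - radius), min(h, y + radius + 1))
                for i in range(max(0, x - radius), min(w, x + radius + 1))
            ]
            out[y][x] = reduce(values)
    return out


def dilate(mask, radius):
    """Expand the mask by `radius` pixels (neighbourhood maximum)."""
    return _window(mask, radius, max)


def feather(mask, radius):
    """Soften the mask's borders with a box blur (neighbourhood mean)."""
    return _window(mask, radius, lambda v: sum(v) / len(v))


def blend(low, high, mask):
    """Mask value 1 takes the low-res pixel, 0 keeps the high-res pixel."""
    return [
        [m * lo + (1.0 - m) * hi for lo, hi, m in zip(row_lo, row_hi, row_m)]
        for row_lo, row_hi, row_m in zip(low, high, mask)
    ]


def fix_edges(low, high, threshold=0.25, grow=2, soften=1):
    # Assumption: edges are detected on the low-res render, since its edges
    # are the clean ones; the source workflow does not say which image to use.
    mask = feather(dilate(edge_mask(low, threshold), grow), soften)
    return blend(low, high, mask)
```

Calling `fix_edges(low, high)` returns the high-res render with a feathered band around each detected edge replaced by the low-res render. For real images you would run it per color channel, or more sensibly use an image library’s edge detection, dilation, and blur instead of these slow pure-Python loops.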

That same easy trick should be trivial to have the Topaz products do automatically for the user. Yes, it’ll take 2x the rendering time, but the result is the difference between usable and unusable.

Now, that digression aside, I’m still not sure I understand what you mean by pixel clusters. Do you mean areas of high frequency perhaps? Or low frequency?

EDIT. I think I might understand what you mean, after cropping and center-aligning each still into a separate image and doing real A/B toggling between them. I see that the left image has misinterpreted noise as texture, especially on the actress’ lower left uniform (to the lower right in the image), where it boosted the noise frequency, creating something that I suppose could be called “pixel clusters”. Is that what you meant? If so, it starts making sense. It’s the same problem Gigapixel has with the standard and high models (profiles).

Actually it does. If you look at the edge separating the black and yellow parts of her uniform, or the line between the top of her forehead and her hair, there are clear “halo” effects there (still, in present versions).

So Dehalo gets rid of the frequency spikes where noise is incorrectly interpreted as textures to be sharpened (like the smooth yellow gradients on her uniform in the right image), but leaves the edges alone, including the halos present in the image to the left?

As for the “plastic look”, yes, the more I look at those pictures in detail, the more of it I see on her forehead. She has what should clearly be soft ridges (bumps) there, but the sharpness knob seems to have made the model decide that the gradient crossed some decision boundary for “sharp features” and raise the frequency in those areas, resulting in a very artificial look.

PS. What episode is that still from? I think my son has the DVD collection, and that particular scene looks rather promising for experimenting with these knobs.