@profiwork

Can confirm your findings.

As a side effect of my "Quality of Gigapixel" problems, several users, including me, mentioned this problem too.

I hope you get feedback on your request.

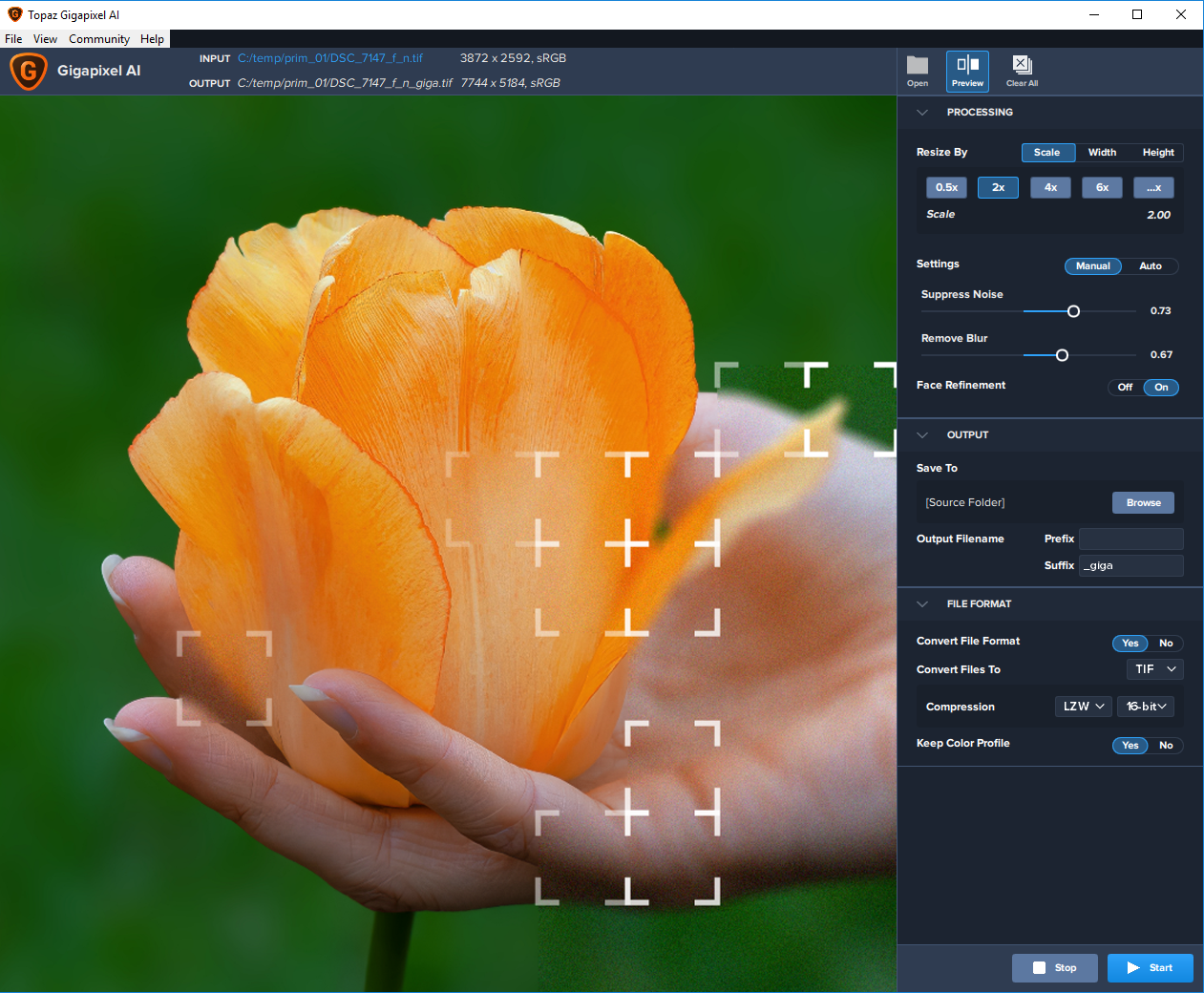

Look at this picture to see my result compared with the preview. The result lies between the original and the preview shown in Gigapixel, but the preview is significantly better than the result.

@profiwork

The problem is that the preview is built from a tiny part of the picture, and this looks better than the whole picture upscaled by the AI.

I used a cropped version of your picture.

If I upscale this crop 6x, the eye section looks like the preview and much better than the same section of the whole picture.

- Upper left: whole picture, 6x upscaled
- Upper right: cropped picture, 6x upscaled
- Lower left: original Gigapixel view
- Lower right: Gigapixel preview

After testing both speed and quality, I’ve settled on these preferences for the best result:

- Use Max Quality AI Models: No
- Enable GPU: Yes
- Graphics Memory: Medium
- Enable OpenVino: Yes

I have found a significant difference between the preview and the processed picture in Gigapixel for human faces. Below is a preview crop, then the pictures processed at 4x with Maximum Quality on and off. Face Refinement was on for both, and the GPU (Radeon RX580 4GB) was used for all. It's a Halloween photo.

Preview:

Max Quality On:

Max Quality off:

@andymagee-52287

Thanks for the tip, I can confirm the quality is better with these settings.

It's still not as good as the preview, but I guess this can’t be fixed; only the preview could get ‘worse’ to match the real output?

This will of course sound like a joke, but I decided to do the work of building a crutch that works around this program bug:

- First, I record an uncompressed frame-by-frame video of the preview region.

- Then I split this video into separate TGA files using “VirtualDub”.

- Then I convert these files to TIFF.

- Then I stitch the series of shots into a panorama using “Microsoft Image Composite Editor”.

- Finally, I add sharpness and focus using “SharpenAI” in stabilize mode.

AND THE RESULT:

If you think the developers rushed to fix this long-standing bug, you are sadly mistaken. I am fighting with the managers handling my bug-fix request. So far they are trying to convince me that Gigapixel AI does not work because I supply insufficiently focused images or non-RAW files. But I would say that, for the time they were taken (2006-2009) and the equipment (Nikon D80), the images are of decent quality. If the images were better, I would not need programs from Topaz Labs.

I can’t use any of the three products I bought; all of them have serious drawbacks that prevent normal use even for processing a family photo, let alone commercial use, where impeccable output quality is required.

You can speak out in this thread, and I will try to pass your words on as a petition to fix this old bug, which wanders from topic to topic and has not yet been resolved. By the way, judging by the correspondence, I get the impression that none of the developers read this forum.

As I get free time, I will create new bug-fix requests. Currently, a request takes about 20 days from its creation to the final response from the developers. I have already collected about a dozen bugs. I think we will manage to submit all these requests within a year, and (just don’t laugh) based on standard personnel-management estimation formulas, fixing them at the current speed will take another three years.

I see similar results now and then. In general, using the CPU produces better and sharper images. Maybe the GPU lacks calculation precision or something?

Perhaps the CPU gives a slightly sharper image, but it is still not what the preview promises.

From correspondence with the developers, I understand a little of what the problem is, and it will not be solved by their methods within the next five years at least. I proposed that they add a switch for a new processing mode: render the image by feeding it, multi-threaded, in small pieces into the jaws of the AI.

This will work; I have already tried it and shown it to everyone. But the developers are unenthusiastic about adding this simple function, even though everything needed for it is already in place. No one contacted me to clarify the idea. You can look at how I imagine it; I have not finished refining the interface concept, but I decided to show it to you anyway.

In fact, there is not one problem but several problems that produce this result. I need free time to finish preparing examples of the errors before submitting a request to correct them.

I don’t know, but I wonder whether Topaz has outsourced its coding to China (or similar)?

Some of the mistakes that show up in various Topaz products show a lack of full understanding of English.

One example is where Studio 2 had two identical ‘Looks’, both labelled ‘Stain Glass’.

“Stained Glass” would obviously be much better (the name of the ‘Look’ was eventually changed, and it was reduced to just one copy), but a level of English that let someone think “Stain Glass” was OK shows that English is not a major part of the Topaz vocabulary.

Maybe that’s why some of these long-standing faults are not getting fixed?

I noticed that the quality of the preview also sometimes changes when I move the viewpoint only a little. It can change from quite blurry to really nicely sharp with nearly the same preview area.


interface

I had a little time to finish my vision of the interface. I tried to bring over from other Topaz Labs programs the parts of the interface that this program needs but that are missing for some reason.

At the same time, I added functions that fix the biggest bugs and weaknesses of all Topaz Labs AI programs. All that remains is to get the developers to implement them.

Little by little, I will now add requests to fix this painful set of bugs, which should have been fixed before the programs were released to the world.

I just tested this software again after giving up on it back at version 4.1.2, and it’s looking worse than ever: the output still doesn’t match the preview, no matter what the settings. CPU is better than GPU, but neither is anything like the preview. From a quick review, v4.4.5 appears to be even less accurate than 4.1.2. This software is a joke, and the folks at Topaz should feel ashamed for not being honest. Do they not see that the output and preview don’t match? Why can’t they? What exactly is the problem here?

And for reference, I’ve been a retoucher for 25 years, with clients that include many of the world’s largest brands. I understand very well how to use software and how to judge the quality of an image.

Since I am a fan of neural networks and have received a few words in correspondence from the developers, I roughly understand the essence of the problem, although I don’t understand why they didn’t immediately do what I propose for all their AI products. The main problem is that they do not train their neural networks on pictures larger than 512 pixels. This means their AI algorithm works only on small pictures of 256x256 or 512x512 pixels. So for now they just stretch the picture, with the quality of any free application, and show in small advertisements how well their algorithm works.

I suggested cutting the incoming large picture into small pieces, even showed how to arrange this in the interface, and showed that a final picture of preview-window quality can be assembled from the set of cut pieces. But the managers who process incoming bug requests do not seem to understand that one programmer could do this in one week without changing the AI algorithm.
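The cut-process-reassemble idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not Topaz's pipeline: the image is a plain grayscale list of rows, `upscale_nearest` is a hypothetical stand-in for the AI model (ordinary nearest-neighbour enlargement), and `tile` and `overlap` are assumed parameters. Overlapping tiles are upscaled independently and averaged where they overlap, so every tile border lies inside a neighbouring tile.

```python
from typing import Callable, List

Image = List[List[float]]  # grayscale image stored as rows of pixel values

def tile_starts(length: int, tile: int, overlap: int) -> List[int]:
    """Tile start offsets along one axis; neighbouring tiles share at least `overlap` px."""
    assert 0 <= overlap < tile, "overlap must be smaller than the tile size"
    if tile >= length:
        return [0]
    starts = list(range(0, length - tile, tile - overlap))
    starts.append(length - tile)  # last tile sits flush with the image edge
    return starts

def upscale_nearest(img: Image, s: int) -> Image:
    """Hypothetical stand-in for the AI model: nearest-neighbour enlargement by integer factor s."""
    return [[v for v in row for _ in range(s)] for row in img for _ in range(s)]

def process_tiled(img: Image, s: int, tile: int, overlap: int,
                  model: Callable[[Image, int], Image] = upscale_nearest) -> Image:
    """Upscale `img` tile by tile and average the results where tiles overlap."""
    h, w = len(img), len(img[0])
    acc = [[0.0] * (w * s) for _ in range(h * s)]  # sum of tile outputs per output pixel
    cnt = [[0] * (w * s) for _ in range(h * s)]    # number of tiles covering each output pixel
    for y0 in tile_starts(h, tile, overlap):
        for x0 in tile_starts(w, tile, overlap):
            crop = [row[x0:x0 + tile] for row in img[y0:y0 + tile]]
            out = model(crop, s)  # each tile is independent, so this loop could run in parallel
            for dy, row in enumerate(out):
                for dx, v in enumerate(row):
                    acc[y0 * s + dy][x0 * s + dx] += v
                    cnt[y0 * s + dy][x0 * s + dx] += 1
    # Every output pixel is covered by at least one tile, so cnt is never zero.
    return [[a / c for a, c in zip(ra, rc)] for ra, rc in zip(acc, cnt)]

# Usage: a 10x9 test image, 4 px tiles overlapping by 2 px, 2x enlargement.
img = [[float((7 * x + 13 * y) % 256) for x in range(10)] for y in range(9)]
big = process_tiled(img, s=2, tile=4, overlap=2)
# With nearest-neighbour as the "model", tiling reproduces the whole-image result exactly.
print(big == upscale_nearest(img, 2))
```

With a real model the tile size would be set to whatever the network handles best (the 512 px figure comes from the developer correspondence and is not verified here), and a feathered blend instead of a plain average would hide tile borders better.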

What is actually scarier are the numbers on the buttons for enlarging and reducing the picture in their interface. Of course, I understand I’m too old-fashioned, because I know the theory of image resizing algorithms. But with the current implementation of image processing, an increase of x6 or a decrease to x0.2 amounts to killing whatever good, useful data remains in my picture.
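To put numbers on the x6 and x0.2 factors, a quick calculation shows how little of the output is backed by real source pixels. The sketch assumes a 3872x2592 frame, the native resolution of the Nikon D80 mentioned earlier; `resize_stats` is a hypothetical helper, not part of any Topaz product.

```python
def resize_stats(width: int, height: int, scale: float) -> tuple:
    """Return output width, height and the output/input pixel-count ratio for a uniform scale."""
    out_w, out_h = round(width * scale), round(height * scale)
    ratio = (out_w * out_h) / (width * height)
    return out_w, out_h, ratio

for scale in (6, 0.2):
    w, h, r = resize_stats(3872, 2592, scale)
    if r >= 1:
        # Enlarging: only 1 of every r output pixels has a source pixel behind it.
        print(f"x{scale}: {w}x{h}, {1 - 1 / r:.1%} of pixels are synthesized")
    else:
        # Reducing: only a fraction r of the original pixel count survives.
        print(f"x{scale}: {w}x{h}, {r:.1%} of the original pixel count remains")
# prints:
# x6: 23232x15552, 97.2% of pixels are synthesized
# x0.2: 774x518, 4.0% of the original pixel count remains
```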

That explains why the preview window is so small and why making the image smaller before processing often helps to get better results. It also comes close to my assumption that pixel density and detail density should match for the algorithm to work properly. Maybe an image splitter helps: Cut photo into equal parts online - IMG online

What you describe I consider a bug. The designer should not have to think about whether the picture suits the algorithm well enough for processing. That is why I added the Auto Focus function to my vision of the interface: it automatically resizes the incoming image to the optimal detail density. The irony is that only the developers know their algorithm and where its optimal detail density lies. And this should have been done before the release of all their AI programs.

I made some experiments with cutting an image into many pieces before processing, but afterwards I cannot merge them back into a photo. Photoshop needs some overlap to put the pieces together, but the pieces don’t have any.

Photoshop and other applications can cut with overlap. Use the Slice tool twice with different parameters to get a set of pictures that overlap. And in case you didn't notice, earlier in this thread I recommended another application for stitching images:
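One way to read "use the Slice tool twice with different parameters" is two grids, the second shifted by half a cell, so every cut line of the first pass falls inside a piece of the second pass. The sketch below computes that layout; the half-cell offset, the 300 px cell and the helper names are assumptions for illustration, not Photoshop settings.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (left, top, right, bottom); right/bottom exclusive

def edges(n: int, cell: int, offset: int = 0) -> List[int]:
    """Cut positions along one axis: every `cell` px starting at `offset`, plus both image borders."""
    first = offset if offset > 0 else cell
    return [0, *range(first, n, cell), n]

def grid(width: int, height: int, cell: int, offset: int = 0) -> List[Box]:
    """Slice boxes for one pass; pieces touching the border are clipped to the image."""
    xs, ys = edges(width, cell, offset), edges(height, cell, offset)
    return [(x0, y0, x1, y1)
            for y0, y1 in zip(ys, ys[1:])
            for x0, x1 in zip(xs, xs[1:])]

def seam_margin(n: int, cell: int) -> int:
    """Smallest distance (px) from a pass-1 cut to the nearest pass-2 piece edge (borders included)."""
    seams = edges(n, cell)[1:-1]       # interior cuts of pass 1
    cuts = edges(n, cell, cell // 2)   # all piece edges of pass 2
    return min((min(abs(s - c) for c in cuts) for s in seams), default=n)

pass1 = grid(1000, 600, 300)        # first Slice pass
pass2 = grid(1000, 600, 300, 150)   # second pass, shifted by half a cell
print(len(pass1), len(pass2), seam_margin(1000, 300))  # prints: 8 12 100
```

In this example every pass-1 seam lies at least 100 px inside a pass-2 piece (150 px away from the image border, less near it because border pieces are clipped), which gives a stitcher shared content to match on.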

- Then I stitch the series of shots into a panorama using “Microsoft Image Composite Editor”.

Well, that does not work. A regular image with so many megapixels is so big, and the slices are so small, that Photoshop can no longer put them together, whether they overlap or not.

- In PS: View → New Guide Layout

- Select the Slice tool

- Click the Slices From Guides button

- Export for Web as PNG

- Repeat with other parameters

- Process the slices in Gigapixel AI

- Stitch the images in “Microsoft Image Composite Editor”

That said, I do not really like Photoshop's Export for Web for PNG or JPG; as I recall, it uses lossy compression. Photoshop cannot make a JPG without losses.